Work with Reality Composer Pro content in Xcode

Description: Learn how to bring content from Reality Composer Pro to life in Xcode. We’ll show you how to load 3D scenes into Xcode, integrate your content with your code, and add interactivity to your app. We’ll also share best practices and tips for using these tools together in your development workflow. To get the most out of this session, we recommend first watching “Meet Reality Composer Pro” and “Explore materials in Reality Composer Pro” to learn more about creating 3D scenes.

Chapters:

0:00 - Introduction

2:37 - Load 3D content

6:27 - Components

12:00 - User Interface

27:51 - Play audio

30:18 - Material properties

33:25 - Wrap-up

Reality Composer Pro

Reality Composer Pro is a developer tool for preparing RealityKit content to be used in spatial computing apps.

The editor UI and features of Reality Composer Pro are covered in the sessions:

Explore materials in Reality Composer Pro - WWDC23

Meet Reality Composer Pro - WWDC23

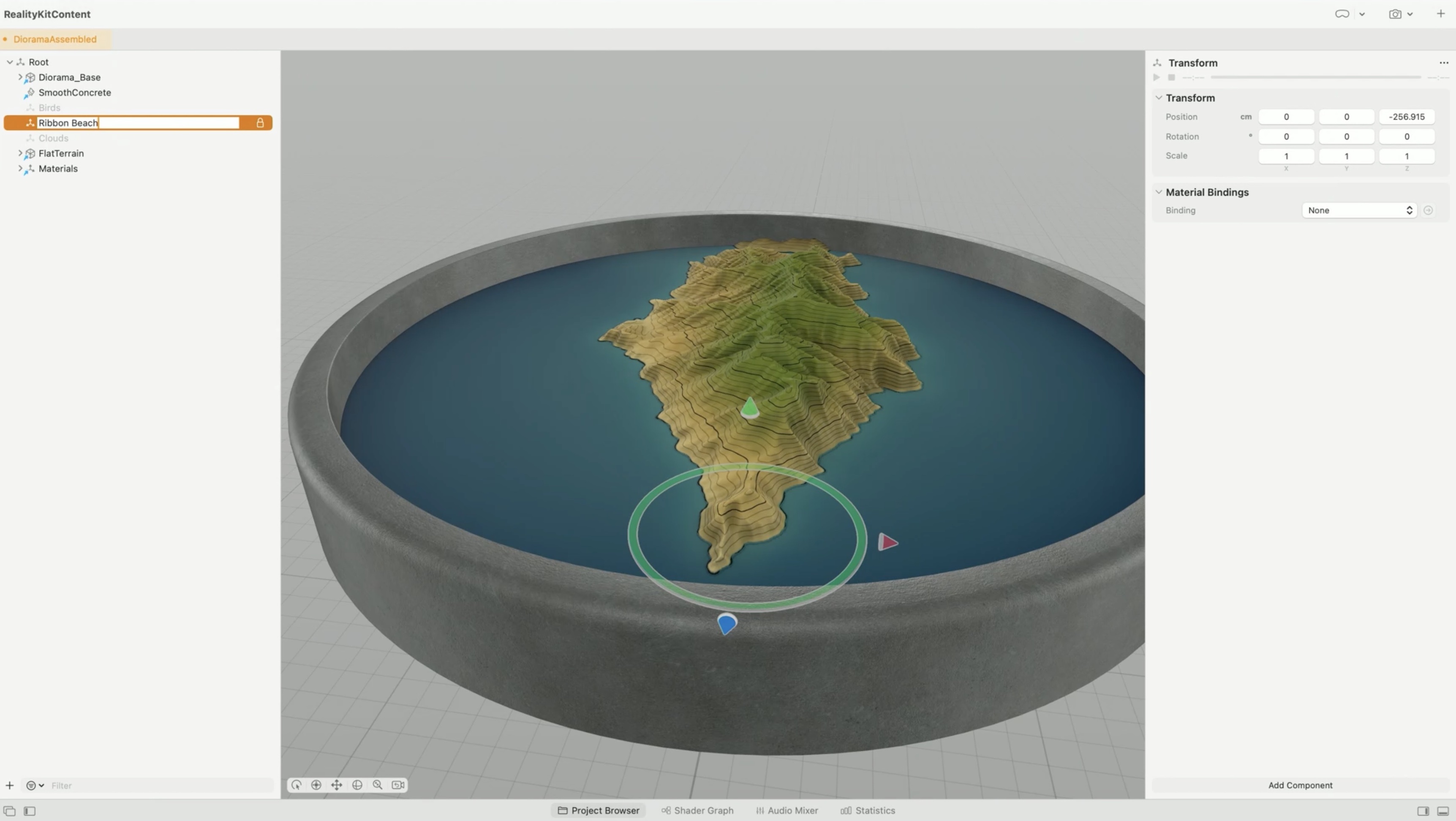

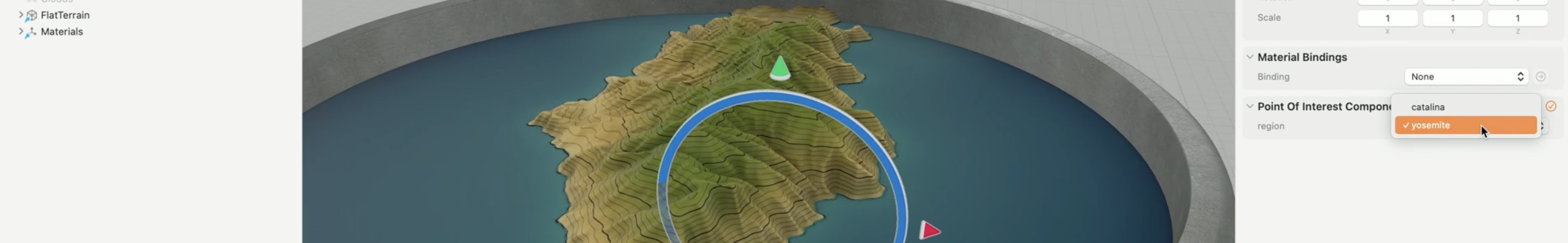

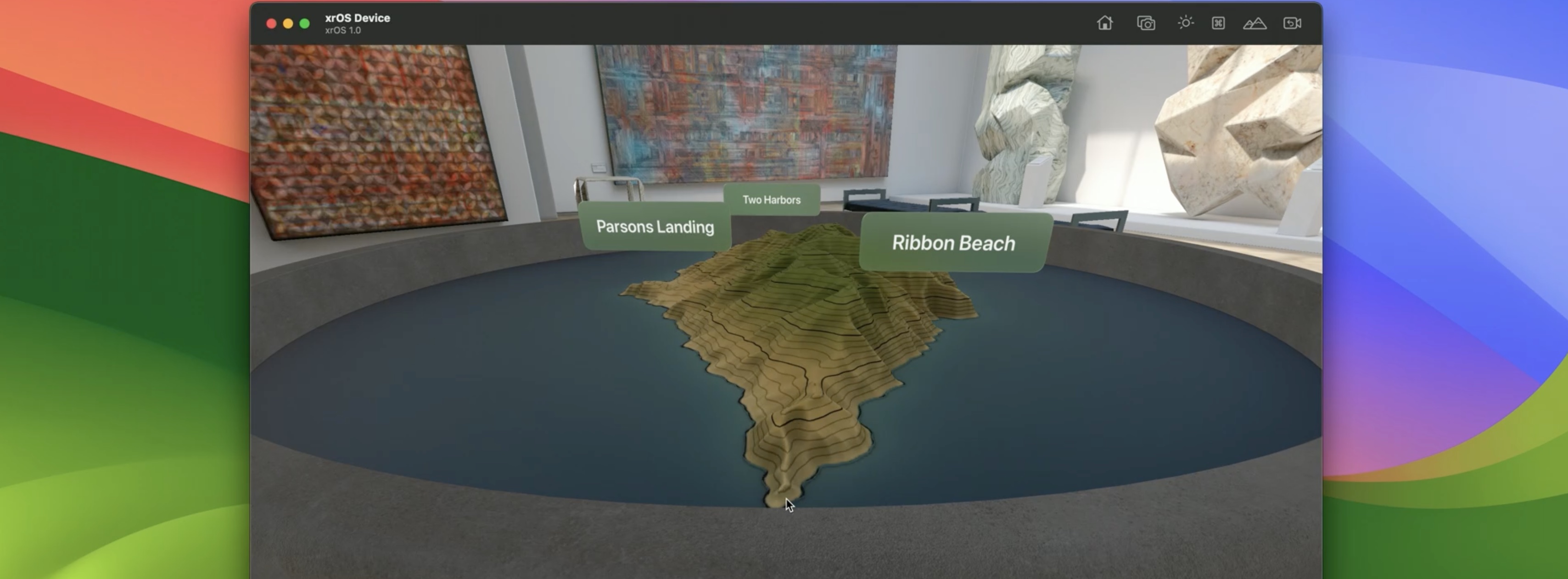

We're looking at a topographical map of Yosemite National Park.

We've added a slider to morph between two different California landmarks. Now we're looking at Catalina Island off the coast of Los Angeles.

We also have hovering 2D SwiftUI buttons positioned in 3D space to learn more about various points of interest in that map.

Load 3D content

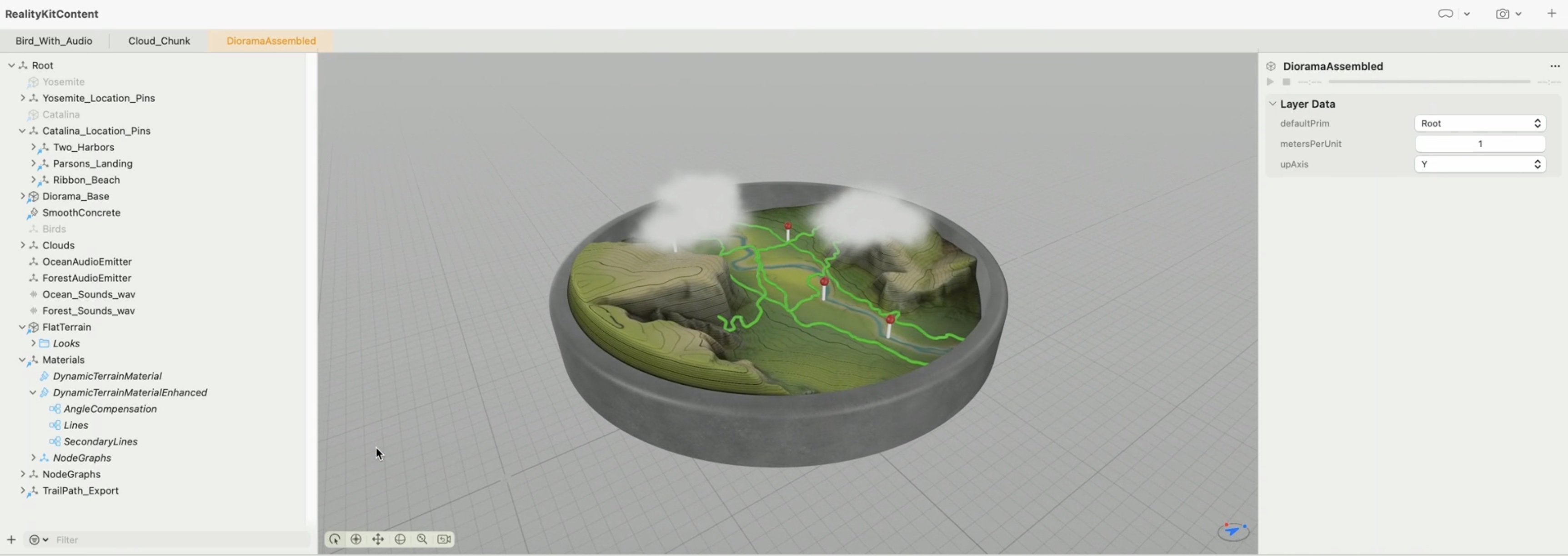

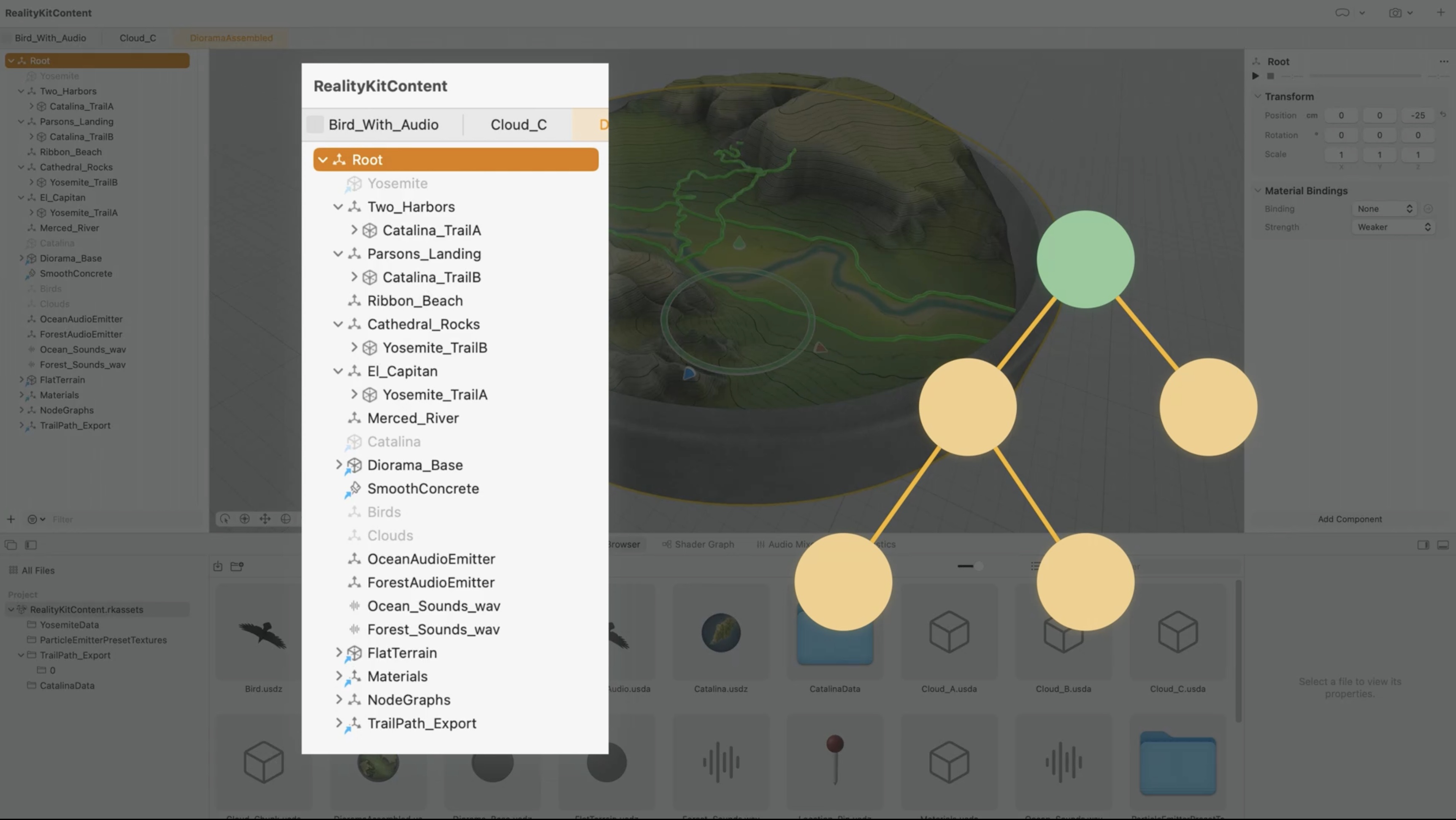

In the above sessions, Apple made a Reality Composer Pro project that contains all the assets for a diorama. These tabs at the top each represent one root entity to be loaded at runtime.

Let's load this scene named DioramaAssembled at runtime.

We use Entity's asynchronous initializer to create an entity with the contents of our Reality Composer Pro package.

```swift
let entity = try await Entity(named: "DioramaAssembled", in: realityKitContentBundle)
```

We specify which entity we want to load using its string name, and we give it the bundle that our package produces. The initializer throws if it can't find anything in our Reality Composer Pro project by that name. realityKitContentBundle is a constant that is autogenerated in the Reality Composer Pro package. This code goes in a RealityView make closure. A RealityView is a new kind of SwiftUI view.

It's the bridge between the worlds of SwiftUI and RealityKit.

```swift
RealityView { content in
    do {
        let entity = try await Entity(named: "DioramaAssembled", in: realityKitContentBundle)
        content.add(entity)
    } catch {
        // Handle error
    }
}
```

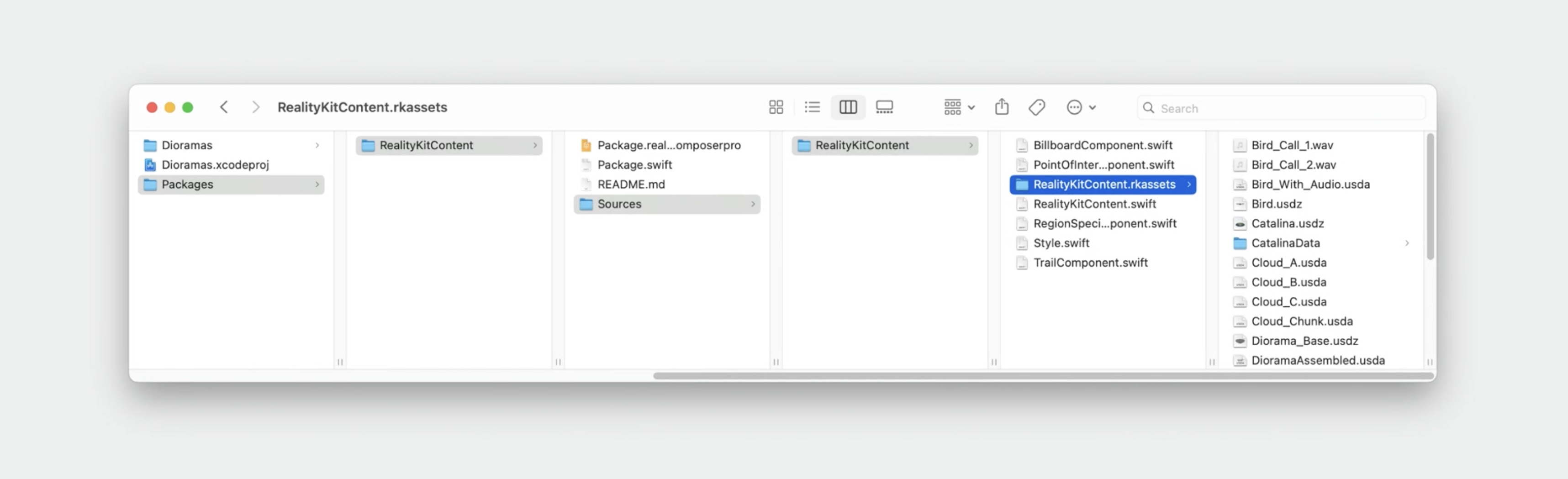

Anatomy of a Reality Composer Pro package

Put assets into a Swift Package, with an .rkassets directory inside it, like this.

Xcode compiles the .rkassets folder into a format that's faster to load at runtime.

The entity we just loaded is actually the root of a larger entity hierarchy. It has child entities and they in turn have child entities. If we wanted to address one of the entities lower down in the hierarchy, we could give it a name in Reality Composer Pro, and then at runtime, we could ask the scene to find that entity by its name.
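As a rough illustration of looking up a named entity, the sketch below builds a tiny hierarchy in code instead of loading the real package, then searches it with `findEntity(named:)`, which looks through an entity's descendants. The `Markers` group and the layout of the hierarchy are assumptions made for this example; only the name `Ribbon_Beach` comes from these notes.

```swift
import RealityKit

// Stand-in for a loaded scene: root -> Markers -> Ribbon_Beach.
// (Hypothetical hierarchy, built in code so the example is self-contained.)
let root = Entity()
root.name = "DioramaAssembled"

let group = Entity()
group.name = "Markers"

let marker = Entity()
marker.name = "Ribbon_Beach"

group.addChild(marker)
root.addChild(group)

// Search the descendants of root for an entity with the given name.
let found = root.findEntity(named: "Ribbon_Beach")
let missing = root.findEntity(named: "No_Such_Entity")
```

The lookup returns an optional, so a misspelled name shows up as `nil` rather than as a crash.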

Entity Component System

Entities are a part of ECS, which stands for Entity Component System.

ECS has some close parallels to object-oriented programming but is different in some key ways. In the object-oriented programming world, the object has properties which are attributes that define its nature, and it has its own functionality written in a class that defines the object.

Entity

In the ECS world, an entity is anything you see in the scene. Entities can also be invisible. They don't hold attributes or data, though. We put our data into components instead.

Components

Components can be added to or removed from entities at any time during app execution, which provides a way to dynamically change the nature of an entity.

System

A system is where behavior lives. It has an update function that's called once per frame. That's where we put our ongoing logic. In the system, we query for all entities that have a certain component on them, or configuration of components, and then we perform some action and store the updated data back into those components.
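To make the split between entities, components, and systems concrete, here is a toy version in plain Swift. It is not RealityKit: `ToyEntity`, `ToyComponent`, `Spin`, and `SpinSystem` are all hypothetical names invented for this sketch, and a real system would be driven once per frame by the framework rather than called by hand.

```swift
// A toy ECS in plain Swift (not RealityKit's API).
protocol ToyComponent {}

// Components hold data only.
struct Spin: ToyComponent {
    var angle: Double
    var speed: Double
}

// Entities are just containers: at most one component per type.
final class ToyEntity {
    private var storage: [ObjectIdentifier: ToyComponent] = [:]

    func set<C: ToyComponent>(_ component: C) {
        storage[ObjectIdentifier(C.self)] = component
    }

    func get<C: ToyComponent>(_ type: C.Type) -> C? {
        storage[ObjectIdentifier(type)] as? C
    }
}

// Systems hold behavior: find entities with a given component,
// update the data, and store it back.
struct SpinSystem {
    func update(entities: [ToyEntity], deltaTime: Double) {
        for entity in entities {
            guard var spin = entity.get(Spin.self) else { continue }
            spin.angle += spin.speed * deltaTime
            entity.set(spin)
        }
    }
}

let spinner = ToyEntity()
spinner.set(Spin(angle: 0, speed: 2))
let bystander = ToyEntity()   // has no Spin component, so the system skips it

SpinSystem().update(entities: [spinner, bystander], deltaTime: 0.5)
```

The entity without a `Spin` component is untouched, which is the point of the query step: behavior applies only where the matching data exists.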

For a more in-depth discussion on ECS, check out:

Dive into RealityKit 2 - WWDC21

Build spatial experiences with RealityKit - WWDC23

Components

To add a component to an entity in Swift, we use entity.components.set() and provide the component value.

```swift
let component = MyComponent()
entity.components.set(component)
```

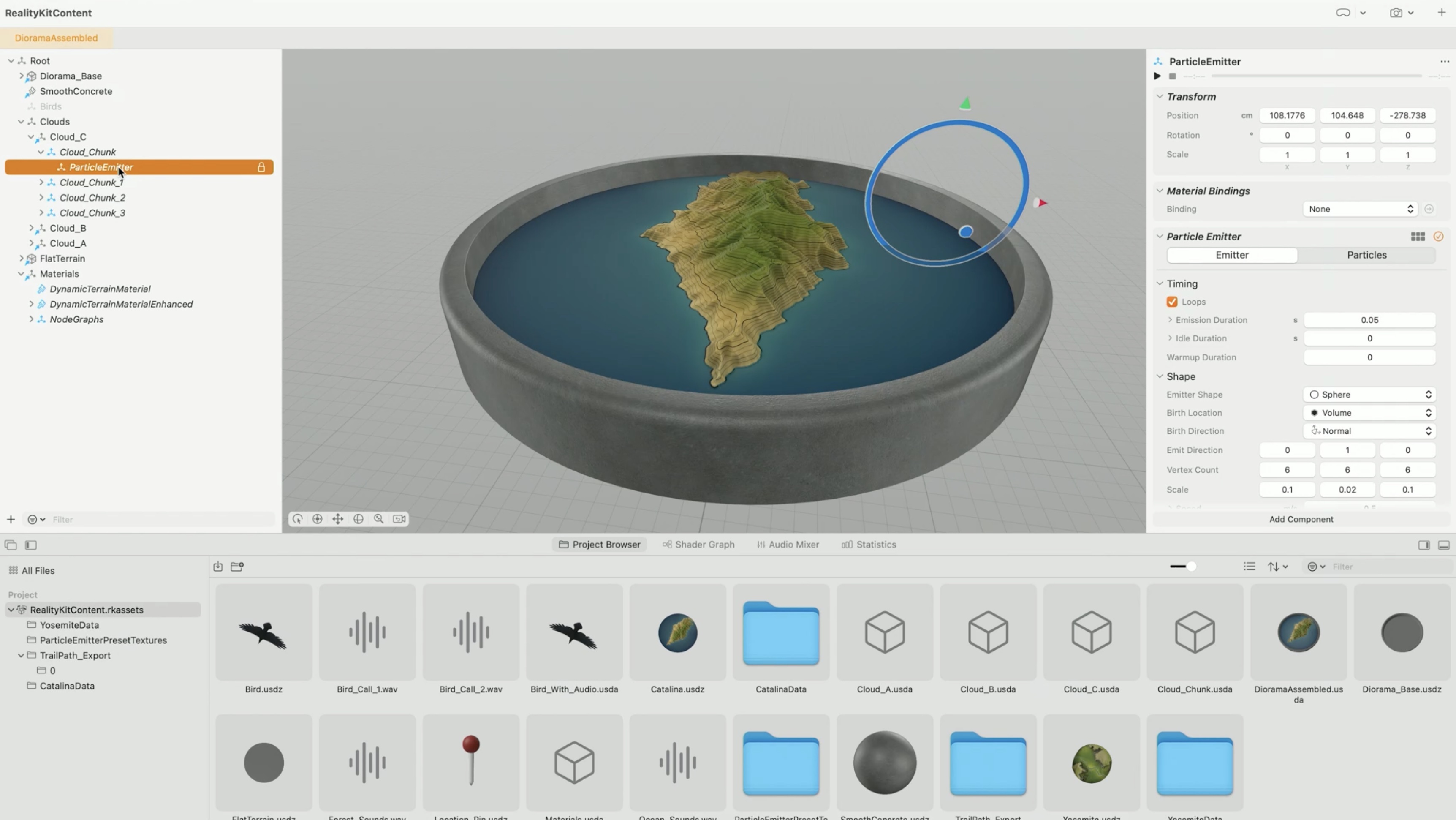

To do the same in Reality Composer Pro, select the entity you want either in the viewport or in the hierarchy.

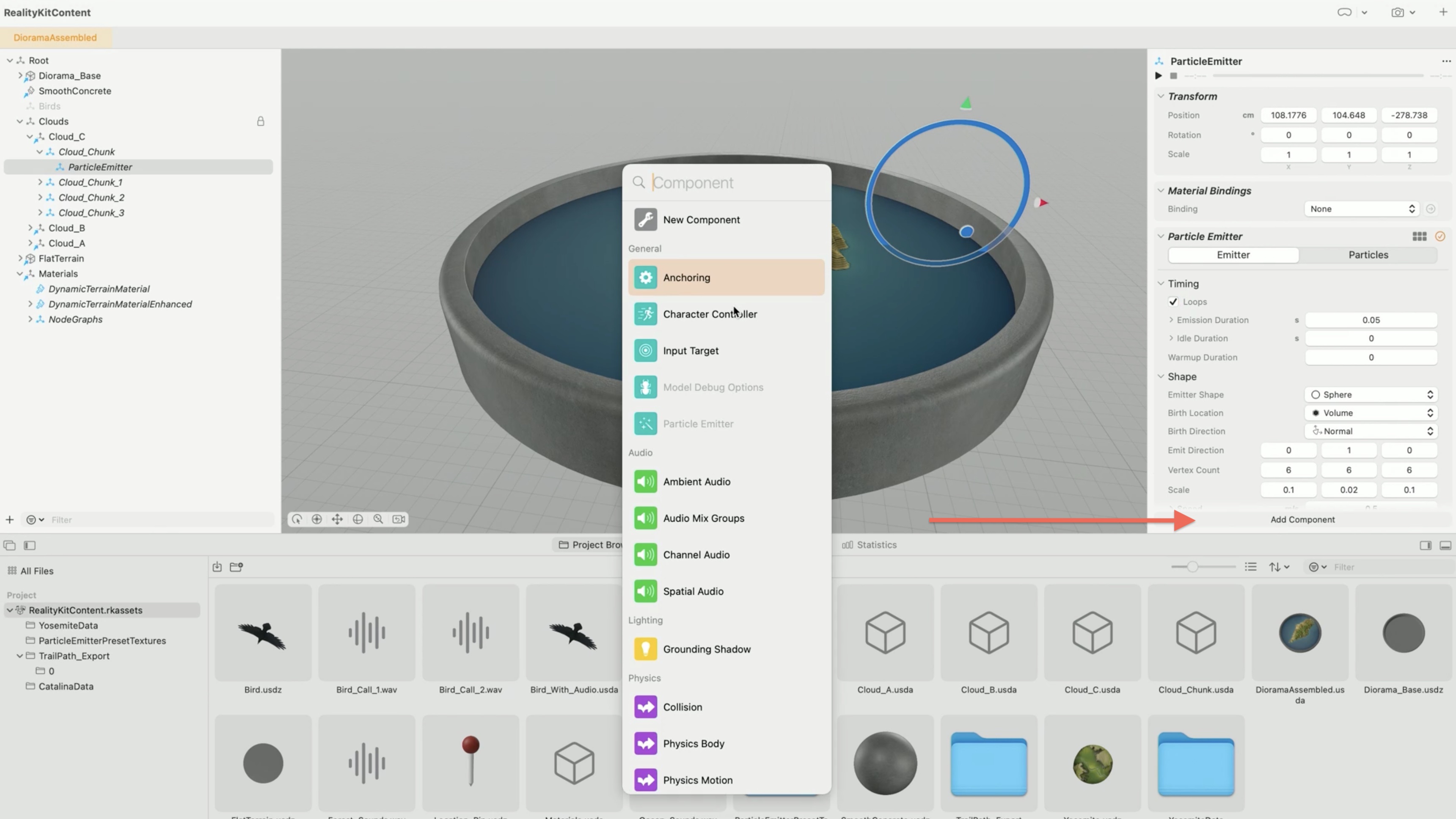

Then, at the bottom of the Inspector Panel, click the Add Component button to bring up a list of all RealityKit's available components.

We can add as many components to an entity as we want, but we can only add one of each type; it's a set. We'll also see any custom components we've made in this list. Let's create our own custom components.
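The one-per-type rule can be seen in code as well. In this minimal sketch, `LabelComponent` is a hypothetical component made up for the example; setting it a second time replaces the first value instead of adding a second copy.

```swift
import RealityKit

// Hypothetical custom component used only for this illustration.
struct LabelComponent: Component {
    var text: String
}

// Register the custom component type before using it.
LabelComponent.registerComponent()

let entity = Entity()
entity.components.set(LabelComponent(text: "first"))
entity.components.set(LabelComponent(text: "second"))   // replaces "first"

let hasLabel = entity.components.has(LabelComponent.self)
let currentText = entity.components[LabelComponent.self]?.text

// Components can also be removed again at runtime.
entity.components.remove(LabelComponent.self)
let hasLabelAfterRemoval = entity.components.has(LabelComponent.self)
```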

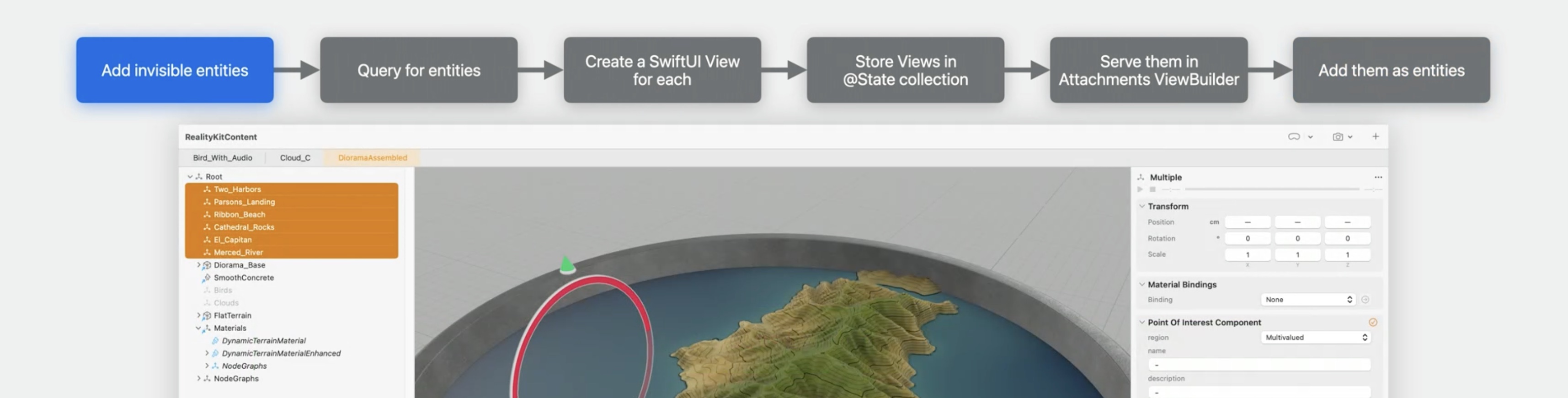

We're going to make those floating buttons that hover over specific points on our terrain. We'll prepare a lot of that UI and functionality in code, but we will mark these entities in Reality Composer Pro.

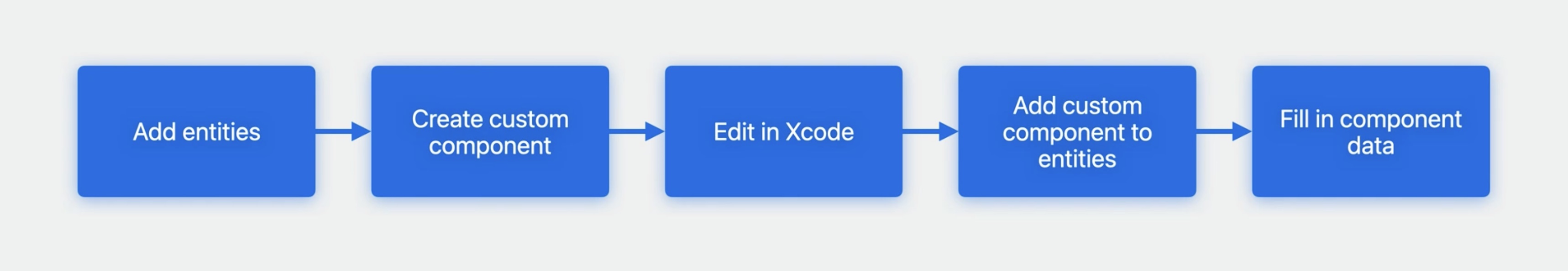

To do this, we're going to:

- Add entities at locations above our terrain map, which will signify to the app that these are the places we want to show our floating buttons.

- Create a point of interest component to house our information about each place.

- Open PointOfInterestComponent.swift in Xcode to edit it, adding properties like a name and a description.

- In Reality Composer Pro, add our new PointOfInterestComponent to each of our new entities.

- Fill in the properties' values.

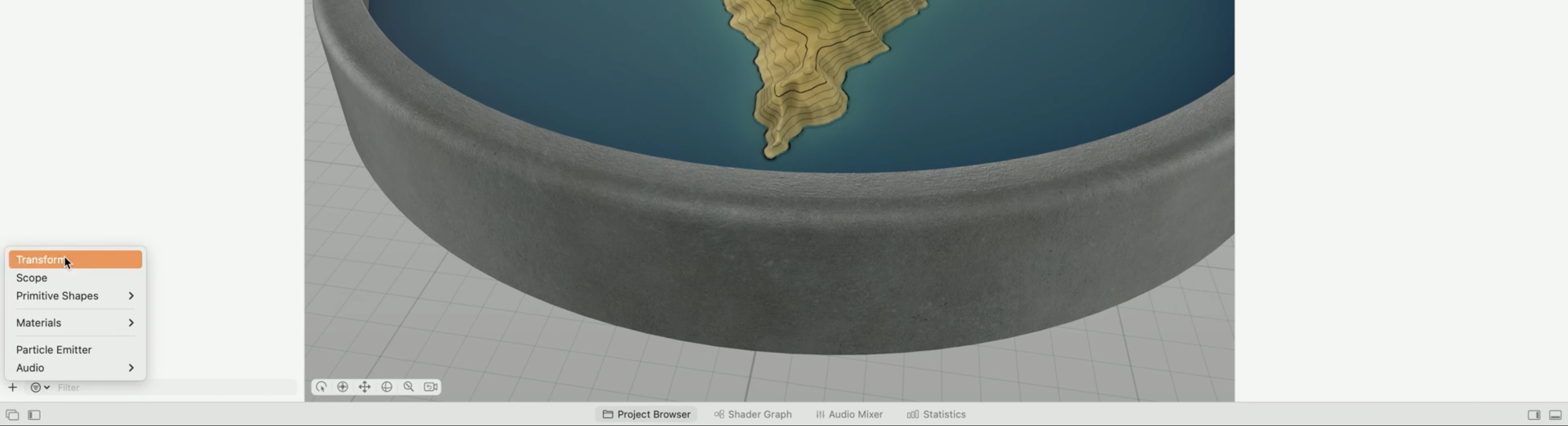

Let's make our first location marker entity, Ribbon Beach, which is a place on Catalina Island. We click the plus menu and select Transform to make us a new invisible entity.

We can name our entity Ribbon_Beach.

Let's put this entity where Ribbon Beach actually is on the island.

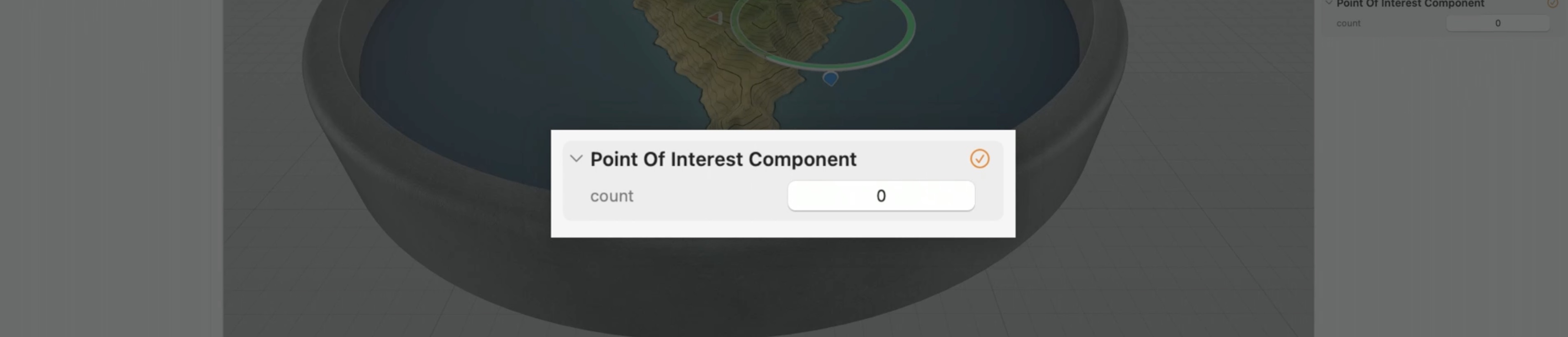

We click on the Add Component button, but this time, we select New Component because we're going to make our own. Let's give it a name, PointOfInterest.

Now it shows up in the Inspector Panel just like our other components do. But what's this count property?

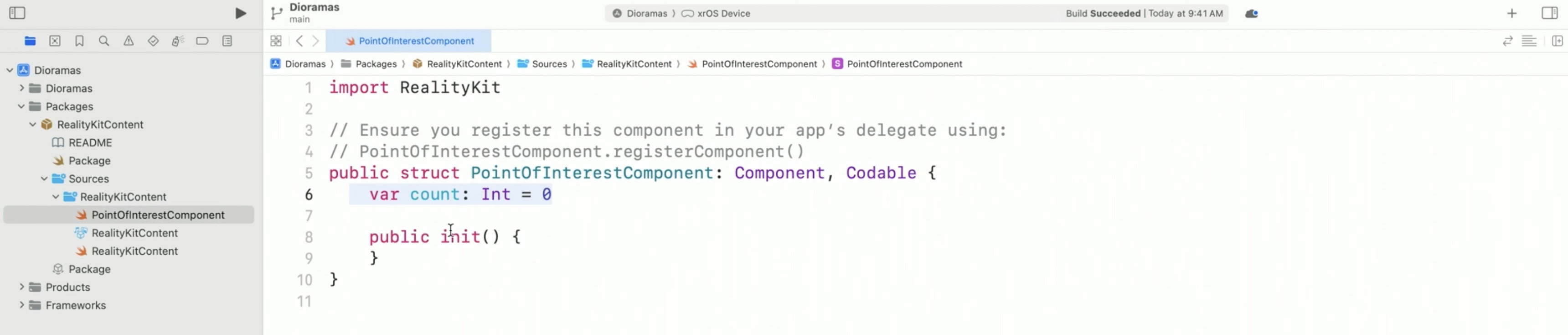

Let's open our new component in Xcode. In Xcode, we see that Reality Composer Pro created PointOfInterestComponent.swift for us.

Reality Composer Pro projects are Swift packages, and the Swift code we just generated lives here in the package. Looking at the template code, we see that that's where the count property came from.

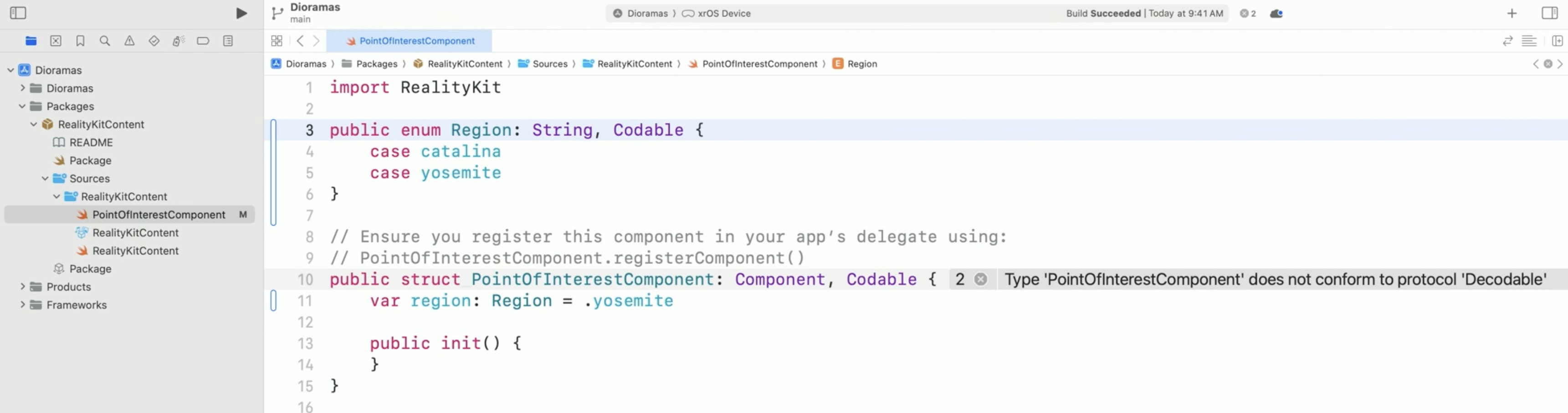

Let's have another property instead. We want each point of interest to know which map it's associated with so that when you change maps, we can fade out the old points of interest and fade in the appropriate ones. So we add an enumeration property, var region. We define the Region enum above the component and give it two cases, since we're only building two maps right now: Catalina and Yosemite. It can serialize as a string. We also conform it to the Codable protocol so that Reality Composer Pro can see it and serialize instances of it.

```swift
import RealityKit

public enum Region: String, Codable {
    case catalina
    case yosemite
}

// Ensure you register this component in your app's delegate using:
// PointOfInterestComponent.registerComponent()
public struct PointOfInterestComponent: Component, Codable {
    var region: Region = .yosemite

    public init() {
    }
}
```

Back in Reality Composer Pro, the count property has gone away and our new region property shows up. It has a default value of yosemite because that's what we initialized in the code, but we can override it here for this particular entity.

If we override it, this value will only take effect on this particular entity. The rest of the point of interest components will have the default value of yosemite unless we override them too.

We're using our PointOfInterestComponent like a signifier, a marker that we stick on these entities. These entities act like placeholders for where we'll put our SwiftUI buttons at runtime. We add our other Catalina Island points of interest the same way we just added Ribbon Beach.

The app doesn't do anything yet. That's because we haven't written any code to handle these point of interest components yet.

User interface

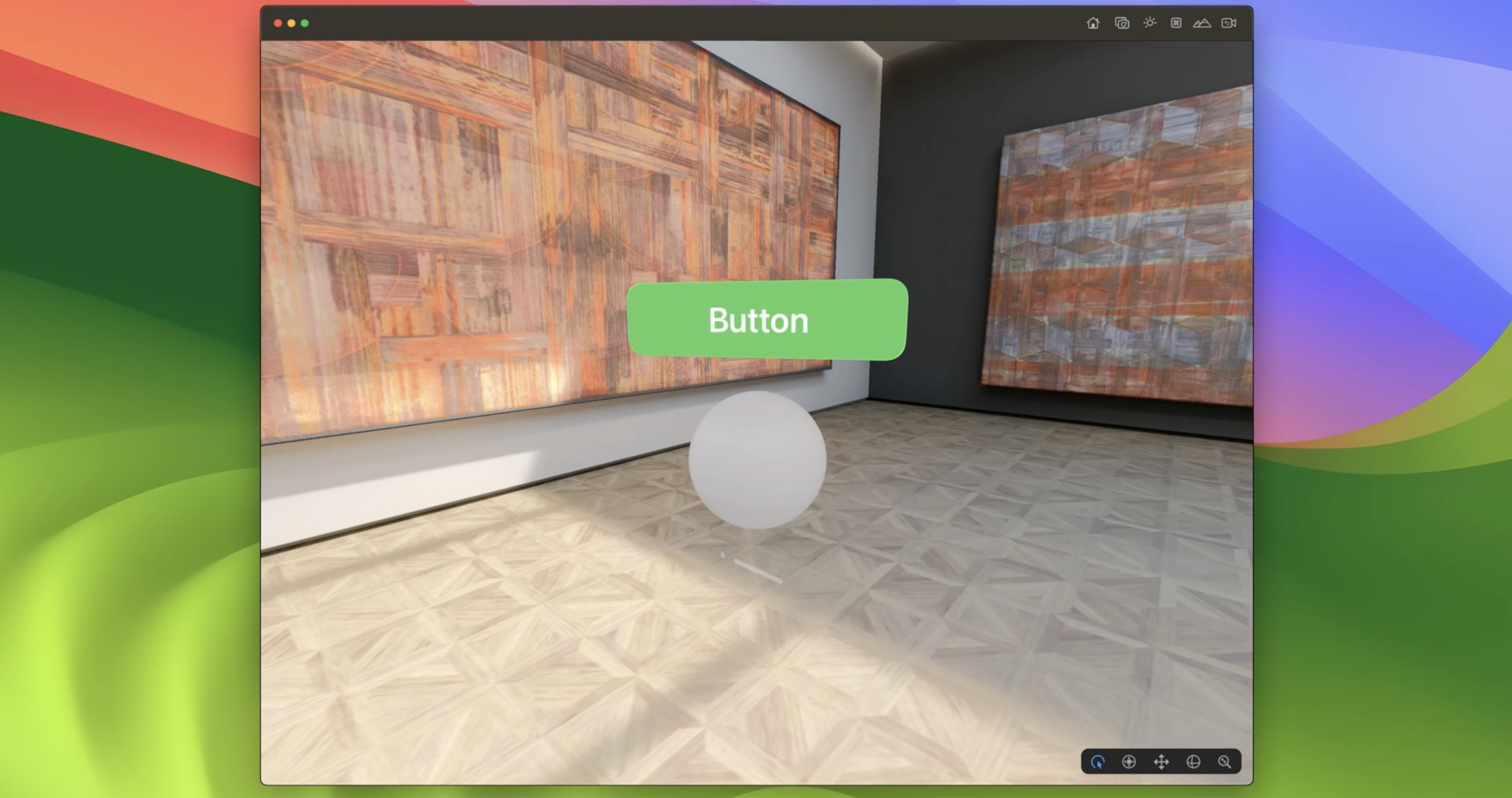

There is a new way of putting SwiftUI content into a RealityKit scene. This is called the Attachments API. We're going to combine attachments with our PointOfInterestComponent to create hovering buttons with custom data at runtime.

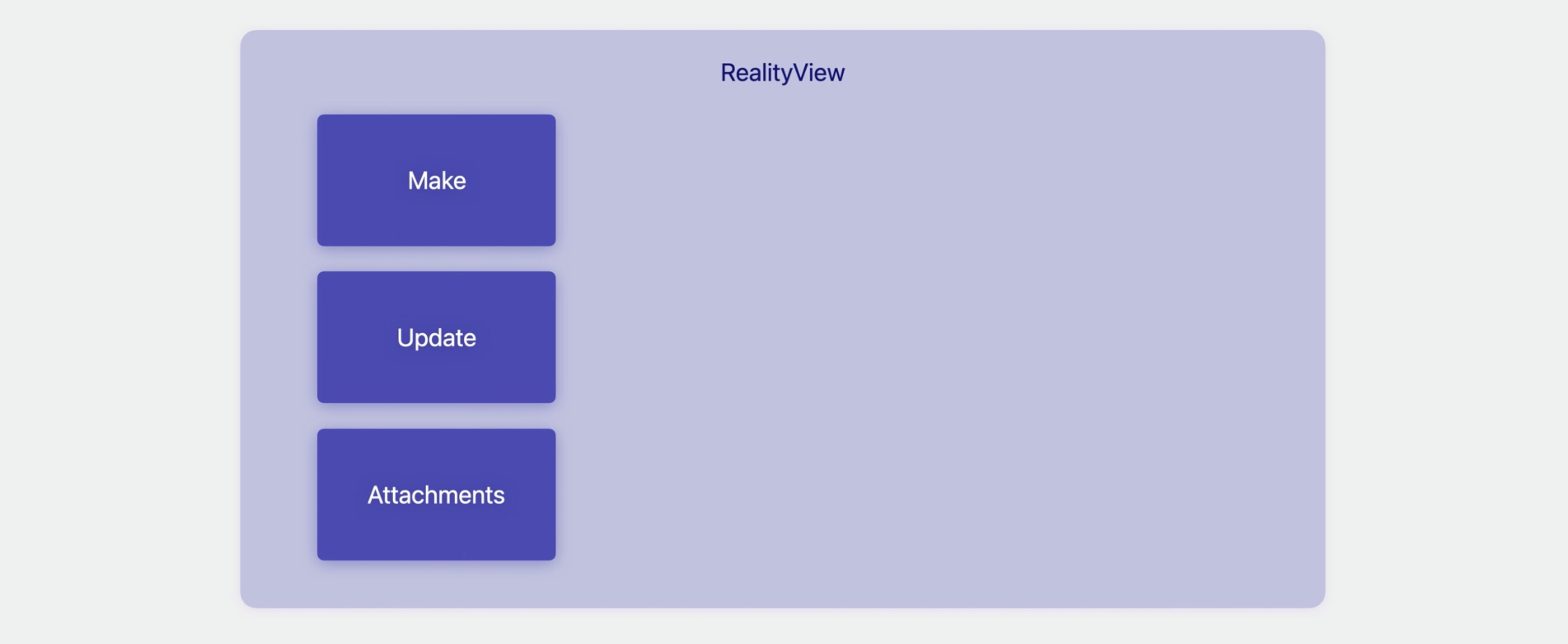

Attachments are a part of the RealityView. We first look at a simplified example:

```swift
RealityView { _, _ in
    // load entities from your Reality Composer Pro package bundle
} update: { _, _ in
    // update your RealityKit entities
} attachments: {
    // SwiftUI Views
}
```

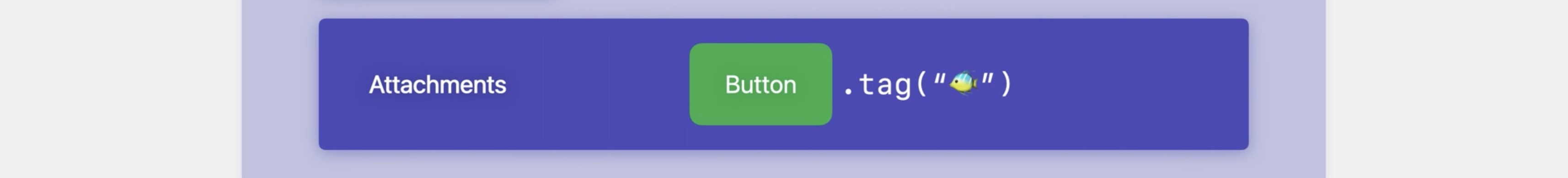

The RealityView initializer takes three parameters: a make closure, an update closure, and an attachments ViewBuilder. We now add a bare-minimum implementation of creating an Attachment View, a green SwiftUI button, and adding it to our RealityKit scene.

In the Attachments ViewBuilder, we make a normal SwiftUI view; we can use view modifiers and gestures and all the rest that SwiftUI gives us. We tag our View with any unique hashable. I've chosen to tag this button view with a fish emoji.

```swift
RealityView { _, _ in
    // load entities from your Reality Composer Pro package bundle
} update: { _, _ in
    // update your RealityKit entities
} attachments: {
    Button { ... }
        .background(.green)
        .tag("🐠")
}
```

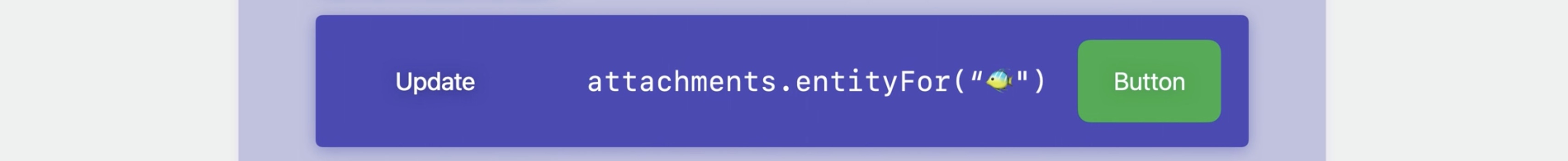

Later, when SwiftUI invokes the update closure, our button View has become an entity. It's stored in the attachments parameter to this closure, and we get it using the tag we gave it before. We can then treat it like any other entity, add it as a child of any existing entity in our scene, or add it as a new top-level entity in the content's entities collection.

```swift
RealityView { _, _ in
    // load entities from your Reality Composer Pro package bundle
} update: { content, attachments in
    // Look up the attachment entity using the same tag we gave the button.
    if let attachmentEntity = attachments.entity(for: "🐠") {
        content.add(attachmentEntity)
    }
} attachments: {
    Button { ... }
        .background(.green)
        .tag("🐠")
}
```

And since it's become a regular entity, we can set its position so it shows up where we want in 3D, and we can add any components we want as well.

RealityView attachments

Here's how data flows from one part of the RealityView to another.

The three parameters of this RealityView initializer:

- The first is make, where the initial set-up scene from your Reality Composer Pro bundle is loaded as an entity and then added to the RealityKit scene.

- The second is update, which is a closure that will be called when there are changes to the view's state. Here, we can change things about the entities, like properties in their components, their position, and even add or remove entities from the scene. This update closure is not executed every frame. It's called whenever the SwiftUI view state changes.

- The third is the attachments ViewBuilder. This is where we can make SwiftUI views to put into your RealityKit scene.

SwiftUI views start out in the attachments ViewBuilder, then they are delivered in the update closure in the attachments parameter.

Here, we ask the attachments parameter whether it has an entity for the same tag we gave to the button in the attachments ViewBuilder.

If there is one, we get a RealityKit entity. In the update closure, we set its 3D position and add it to your RealityKit scene so we can see it floating in space wherever we want.

Here, we've added the button entity as a child of a sphere entity positioned 0.2 meters above its parent.

The make closure also has an attachments parameter. This one is for adding any attachments that we have ready to go at the time this view is first evaluated, because the make closure is only run once.

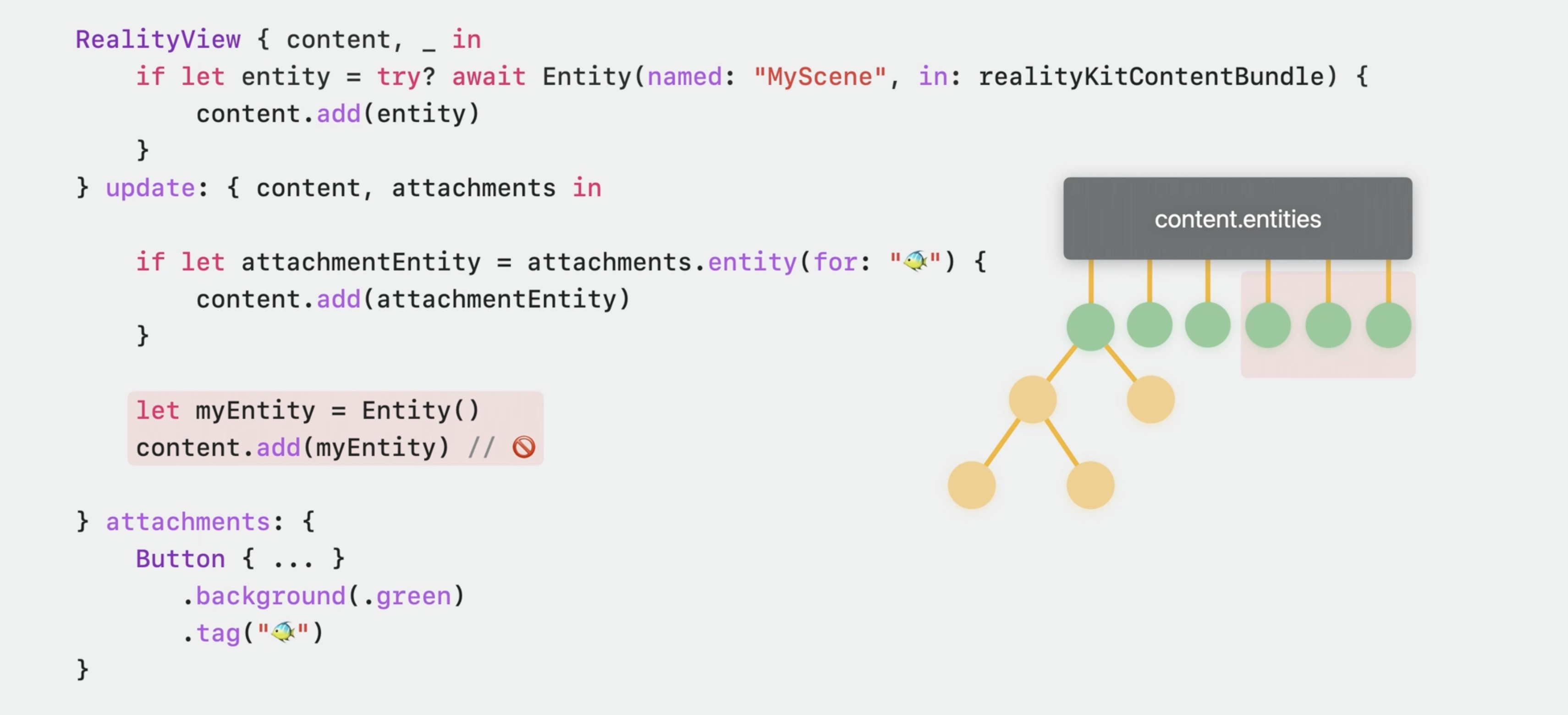

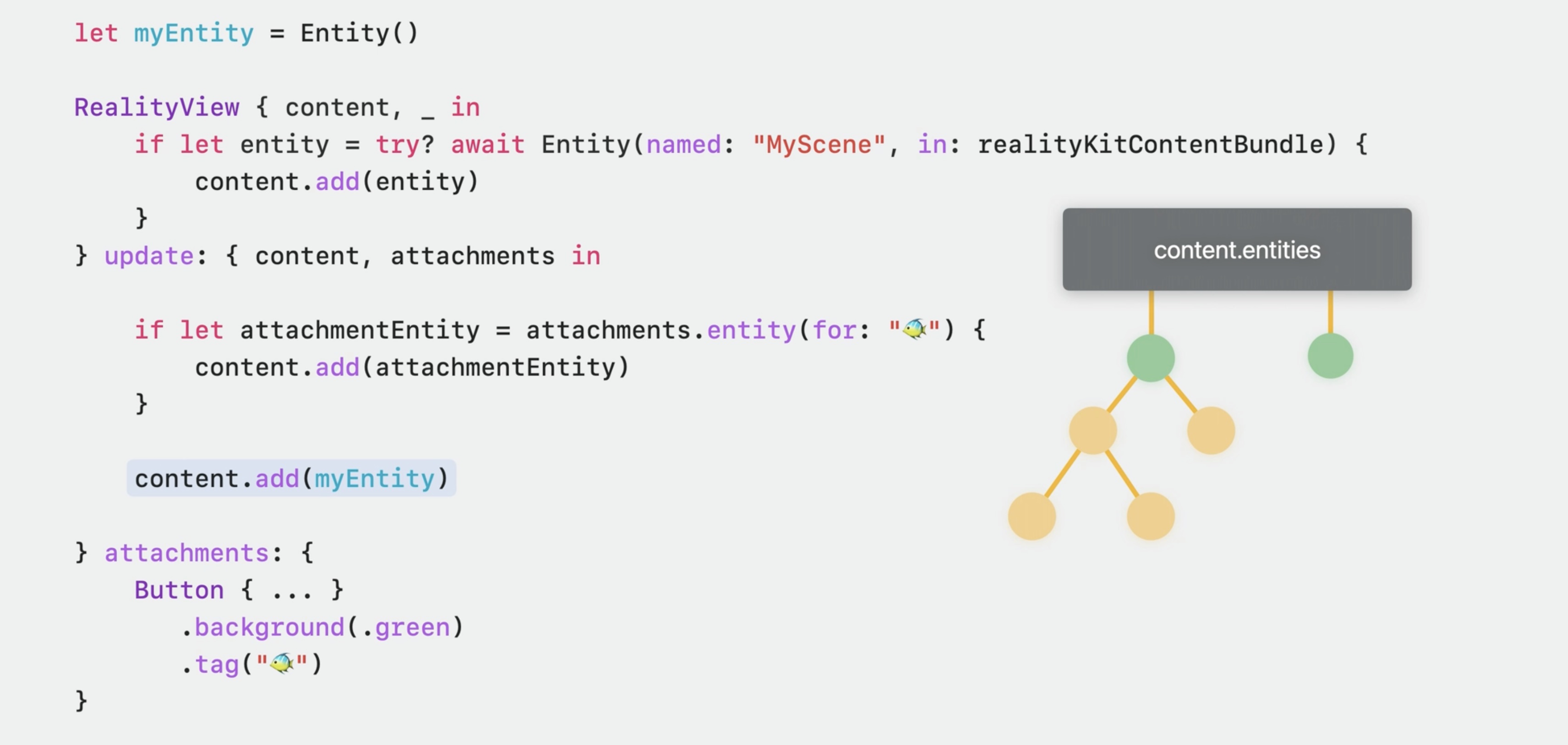

That is the general flow of a RealityView. Now let's look more closely at the update closure. The first parameter to the make and update closures is the RealityKit content, a RealityViewContent. When we add an entity to the content, it becomes a top-level entity in the scene.

Likewise, from the update function, adding an entity to the content gives a new top-level entity in the scene. While the make closure will only be called once, the update closure will be called more than once. If we create a new entity in the update closure and add it to the content there, we'll get duplicates of that entity. (not good.)

To guard against that, we should only add entities to content that are created somewhere that's only run once. We don't need to check if the content.entities already contains the entity.

It's a no-op if we call add with the same entity twice, like a set. The same is true when we parent an entity to an existing entity in the scene: it won't be added twice.

```swift
let myEntity = Entity()

RealityView { content, _ in
    if let entity = try? await Entity(named: "MyScene", in: realityKitContentBundle) {
        content.add(entity)
    }
} update: { content, attachments in
    if let attachmentEntity = attachments.entity(for: "🐠") {
        content.add(attachmentEntity)
    }

    // myEntity is created once, outside the closure, so adding it again is a no-op.
    content.add(myEntity)
} attachments: {
    Button { ... }
        .background(.green)
        .tag("🐠")
}
```

Attachment entities are not created by us; they're created by the RealityView for each attachment view that we provide in our attachments ViewBuilder. That means it's safe to add them to the content in our update closure without checking whether they're already there.

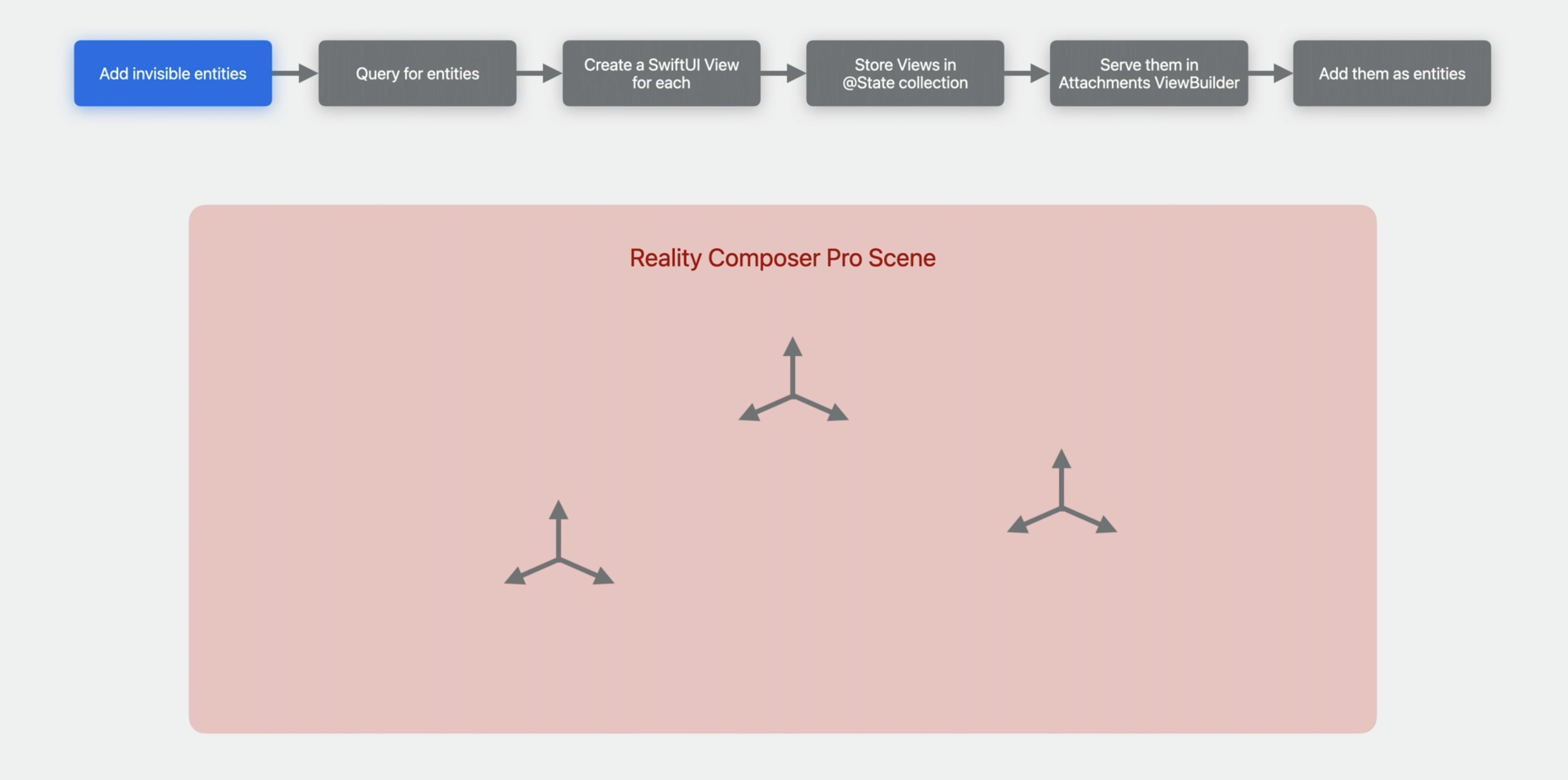

Data-driven

So, that was how we'd write code if we wanted to hardcode our points of interest in the attachments ViewBuilder. But let's make it more flexible.

To make it data-driven, we need the code to read the data that we set up in the Reality Composer Pro scene. We'll be creating our attachment views dynamically.

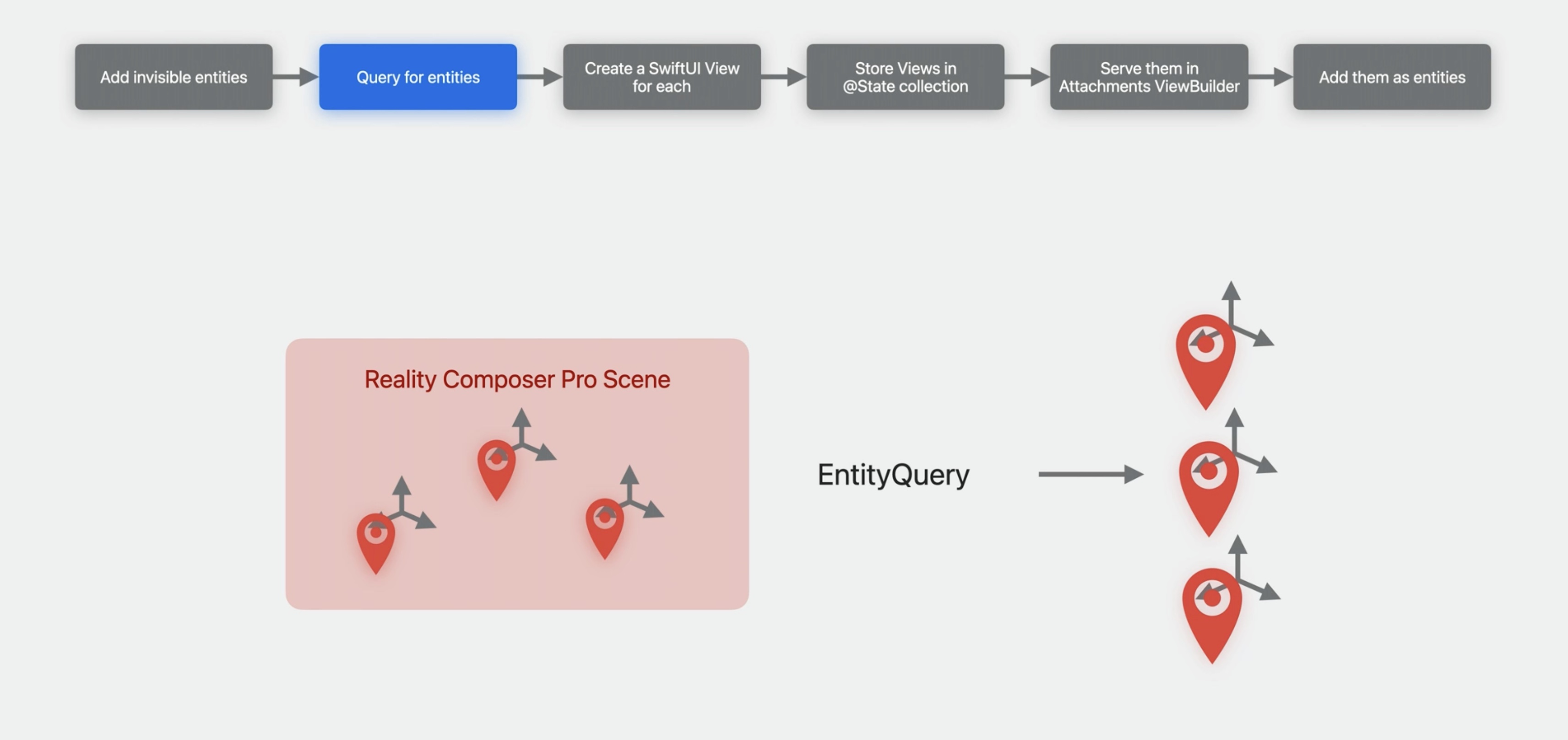

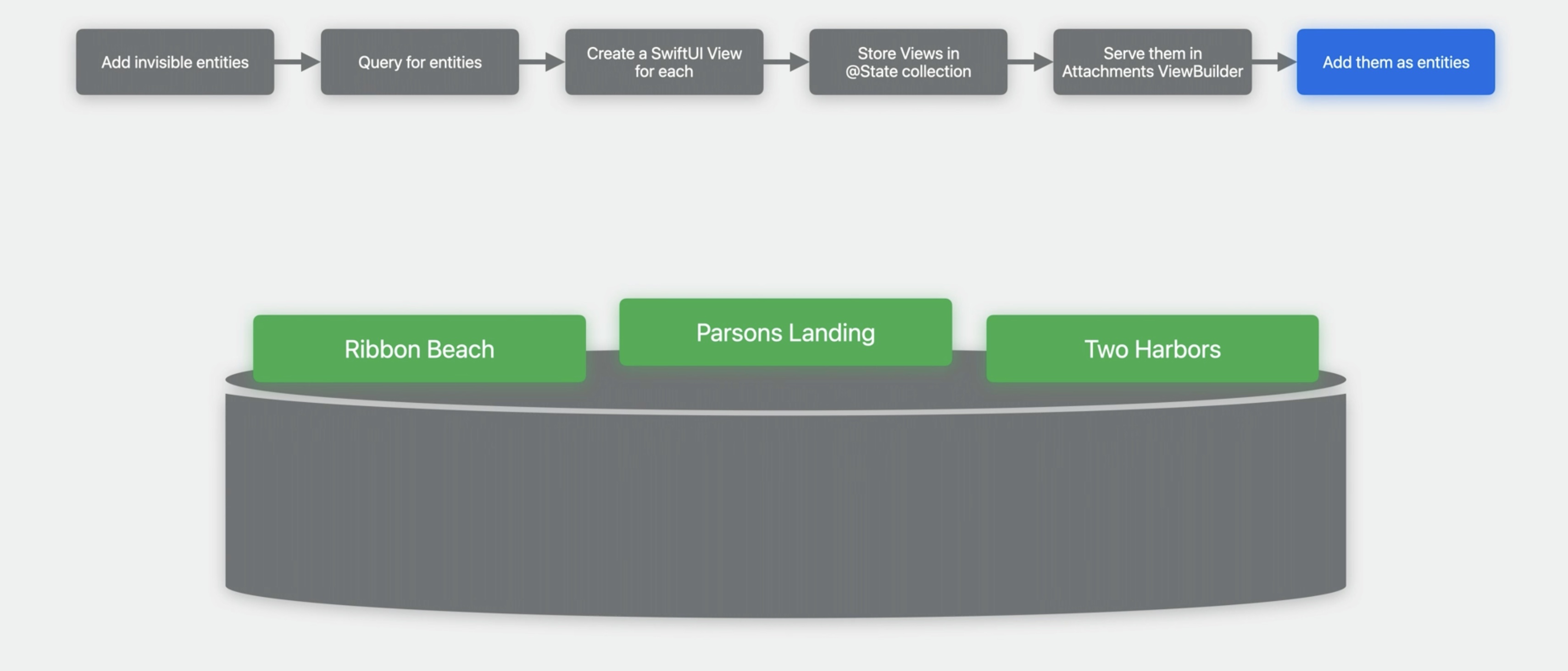

- Add invisible entities: In Reality Composer Pro, we already set up our placeholder entity for Ribbon Beach, and we'll do the same for the other points of interest that we want to highlight in our diorama. We'll fill out all the info each one needs, like their name and which map they belong on.

- Query for entities: Now, in code, we'll query for those entities.

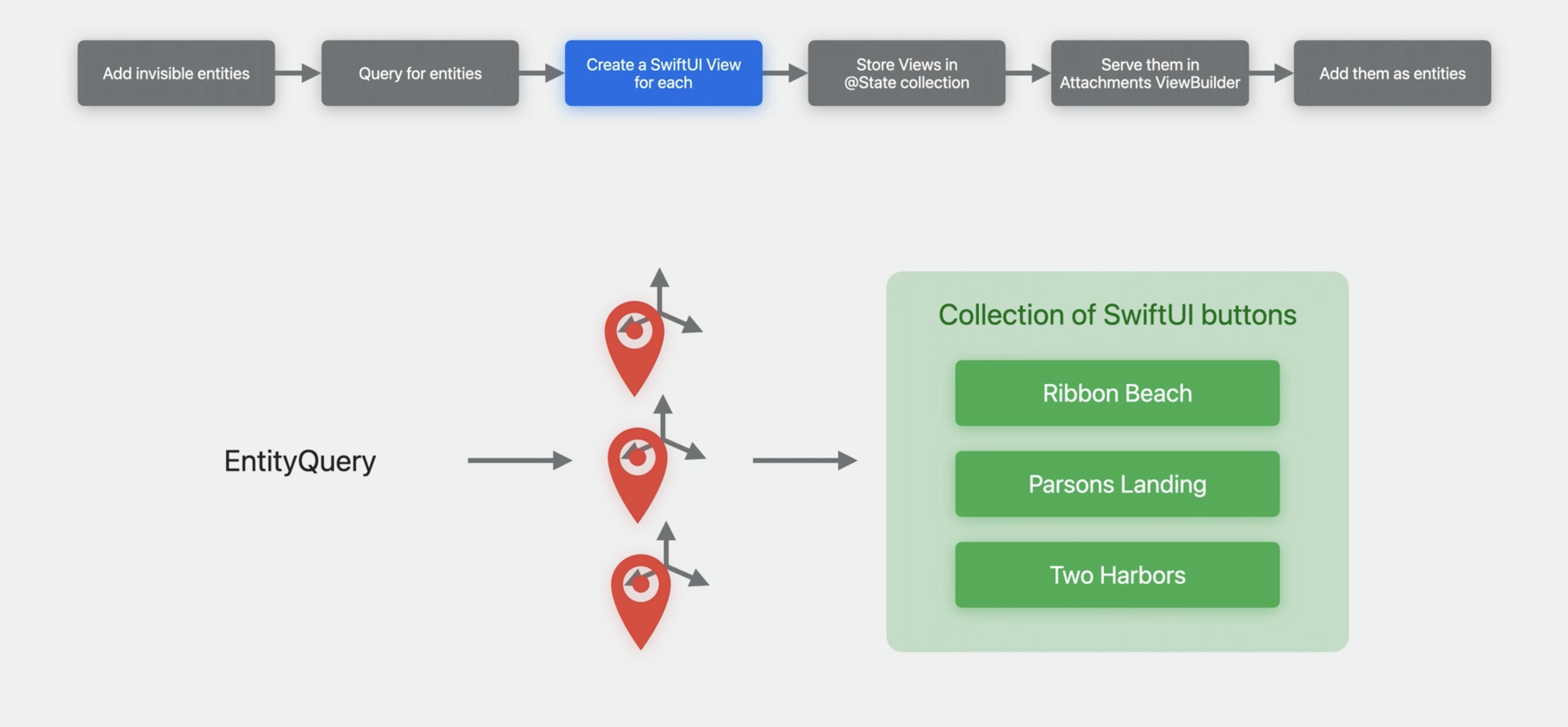

- Create a SwiftUI View for each

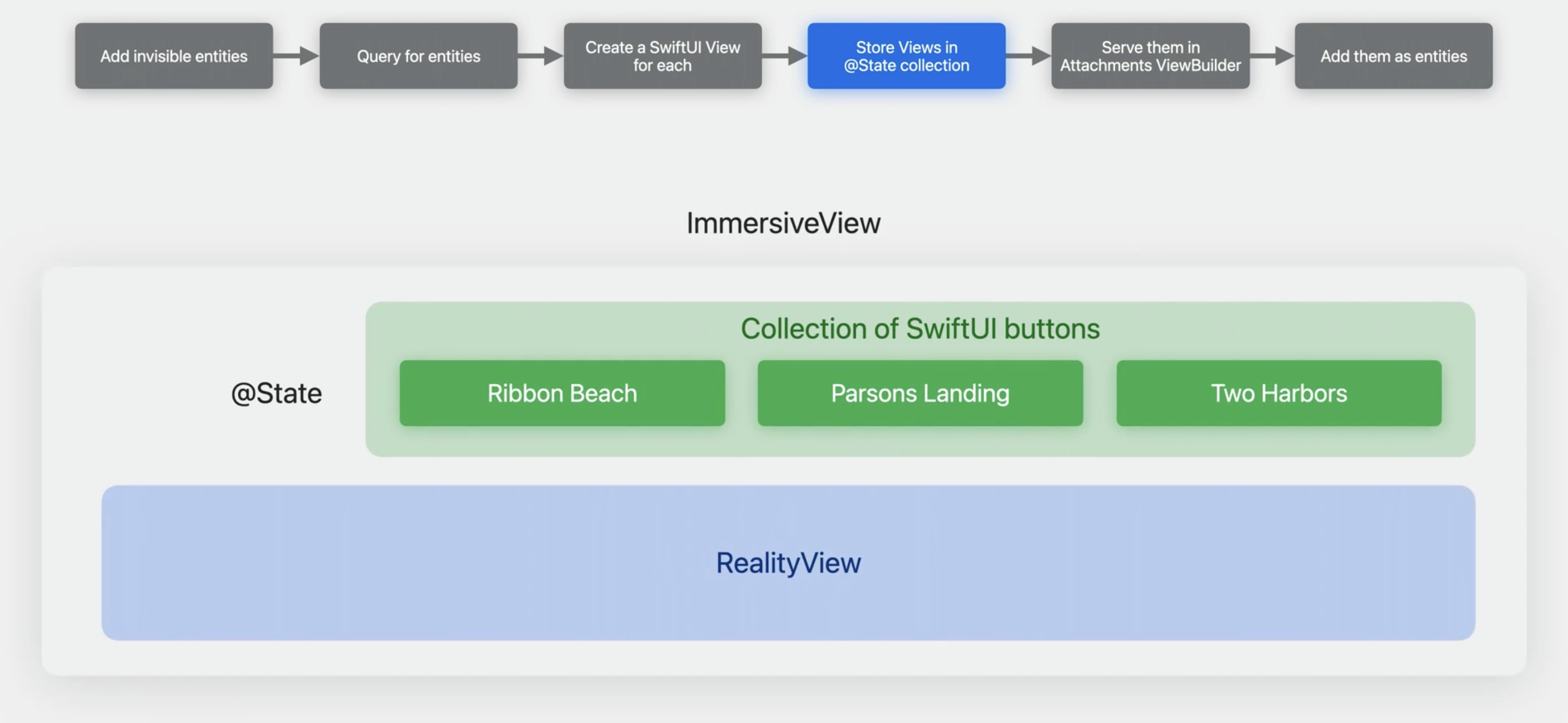

- Store Views in @State collection: In order to get SwiftUI to invoke our attachments ViewBuilder every time we add a new button to our collection, we'll add the @State property wrapper to this collection.

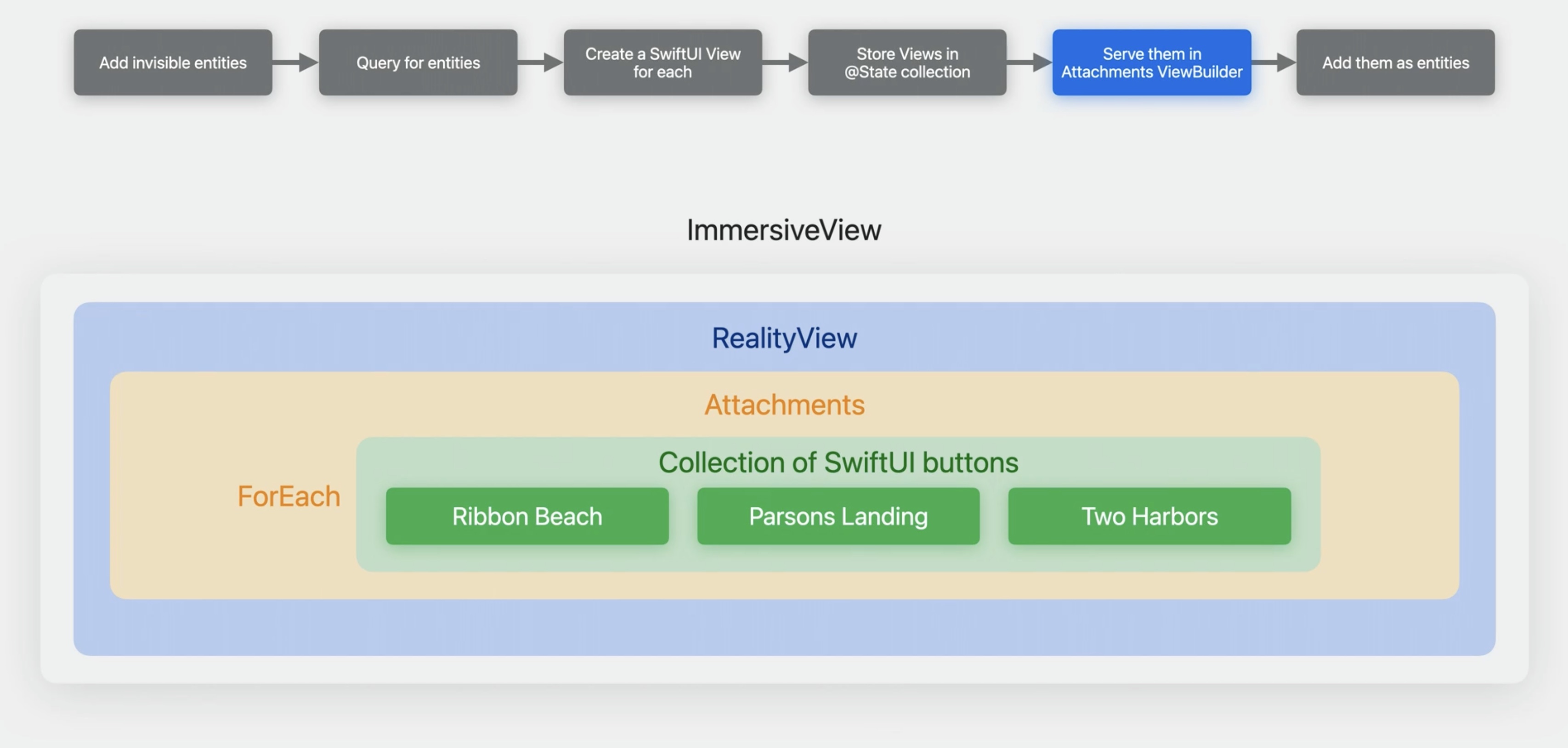

- We'll serve those buttons up to the attachments ViewBuilder.

- Add them as entities in the update closure of our RealityView. We'll add each one as a child of the marker entities we set up in Reality Composer Pro.

We're making use of the Transform Component here, which all entities have by default.

Then we add our PointOfInterestComponent to each of them. In our code, we get references to these entities by querying for all entities in the scene that have the PointOfInterestComponent on them. The query returns the three invisible entities we set up in Reality Composer Pro.

We create a new SwiftUI view for each one and store them in a collection.

To get our buttons into our RealityView, we'll make use of the SwiftUI view-updating flow. This means adding the property wrapper @State to the collection of buttons in our View. The @State property wrapper tells SwiftUI that when we add items to this collection, SwiftUI should trigger a view update on our ImmersiveView. That will cause SwiftUI to evaluate our attachments ViewBuilder and our update closure again.

The RealityView's attachments ViewBuilder is where we'll declare to SwiftUI that we want these buttons to be made into entities. Our RealityView's update closure will be called next, and our buttons will be delivered to us as entities. They're no longer SwiftUI Views now. That's why we can add them to our entity hierarchy.

In the update closure, we add our attachment entities to the scene, positioned floating above each of our invisible entities. Now they will show up visually when we look at our diorama scene.

Let's see how each of these steps is done.

First, we mark our invisible entities in our Reality Composer Pro scene.

To find our entities that we marked, we'll make an EntityQuery. We'll use it to ask for all entities that have a PointOfInterestComponent on them.

```swift
static let markersQuery = EntityQuery(where: .has(PointOfInterestComponent.self))
```

We'll then iterate through our QueryResult and create a new SwiftUI View for each entity in our scene that has a PointOfInterestComponent on it. We'll fill it in with information we grab from the component, the data we entered in Reality Composer Pro.

That view is going to be one of our attachments, so we put a tag on it. We'll use an ObjectIdentifier rather than a fish emoji. Here's the part where we make our collection of SwiftUI Views. We'll call it attachmentsProvider since it will provide our attachments to the RealityView's attachments ViewBuilder. We'll then store our view in the attachmentsProvider.

```swift
static let markersQuery = EntityQuery(where: .has(PointOfInterestComponent.self))
@State var attachmentsProvider = AttachmentsProvider()

rootEntity.scene?.performQuery(Self.markersQuery).forEach { entity in
    guard let pointOfInterest = entity.components[PointOfInterestComponent.self] else { return }

    // The entity's identifier doubles as the attachment tag.
    let attachmentTag: ObjectIdentifier = entity.id

    let view = LearnMoreView(name: pointOfInterest.name, description: pointOfInterest.description)
        .tag(attachmentTag)

    attachmentsProvider.attachments[attachmentTag] = AnyView(view)
}
```

Let's take a look at that collection type.

AttachmentsProvider has a dictionary of attachment tags to views. We type-erased our view so we can put other kinds of views in there besides our LearnMoreView. We have a computed property called sortedTagViewPairs that returns an array of tuples -- tags and their corresponding views -- in the same order every time. Then, in the attachments ViewBuilder, we'll ForEach over our collection of attachments that we made.

This tells SwiftUI that we want one view for each of the pairs we've given it, and we provide our views from our collection. We're letting the ObjectIdentifier do double duty here as both an attachment tag for the view, and as an identifier for the ForEach structure.

```swift
@Observable final class AttachmentsProvider {
    var attachments: [ObjectIdentifier: AnyView] = [:]
    var sortedTagViewPairs: [(tag: ObjectIdentifier, view: AnyView)] { ... }
}

...

@State var attachmentsProvider = AttachmentsProvider()

RealityView { _, _ in
} update: { _, _ in
} attachments: {
    ForEach(attachmentsProvider.sortedTagViewPairs, id: \.tag) { pair in
        pair.view
    }
}
```
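The notes do not show how sortedTagViewPairs produces the same order every time. One possible approach, offered only as an assumption, is to sort the dictionary by tag, since ObjectIdentifier is Comparable. The sketch below uses a generic payload in place of AnyView so it runs without SwiftUI; `AttachmentsProviderSketch` and `Marker` are hypothetical names.

```swift
// Hypothetical stand-in for AttachmentsProvider, generic over the stored value.
final class AttachmentsProviderSketch<V> {
    var attachments: [ObjectIdentifier: V] = [:]

    // Dictionary iteration order is not guaranteed, so sort by tag
    // to get a stable order on every call.
    var sortedTagViewPairs: [(tag: ObjectIdentifier, view: V)] {
        attachments
            .sorted { $0.key < $1.key }
            .map { (tag: $0.key, view: $0.value) }
    }
}

// Hypothetical objects whose identities serve as tags.
final class Marker {}
let markerA = Marker()
let markerB = Marker()
let markerC = Marker()

let provider = AttachmentsProviderSketch<String>()
provider.attachments[ObjectIdentifier(markerB)] = "B"
provider.attachments[ObjectIdentifier(markerA)] = "A"
provider.attachments[ObjectIdentifier(markerC)] = "C"

let firstPass = provider.sortedTagViewPairs
let secondPass = provider.sortedTagViewPairs
```

The order here is stable but arbitrary. If the buttons needed a meaningful order, sorting by something like the point of interest's name would be a more natural choice.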

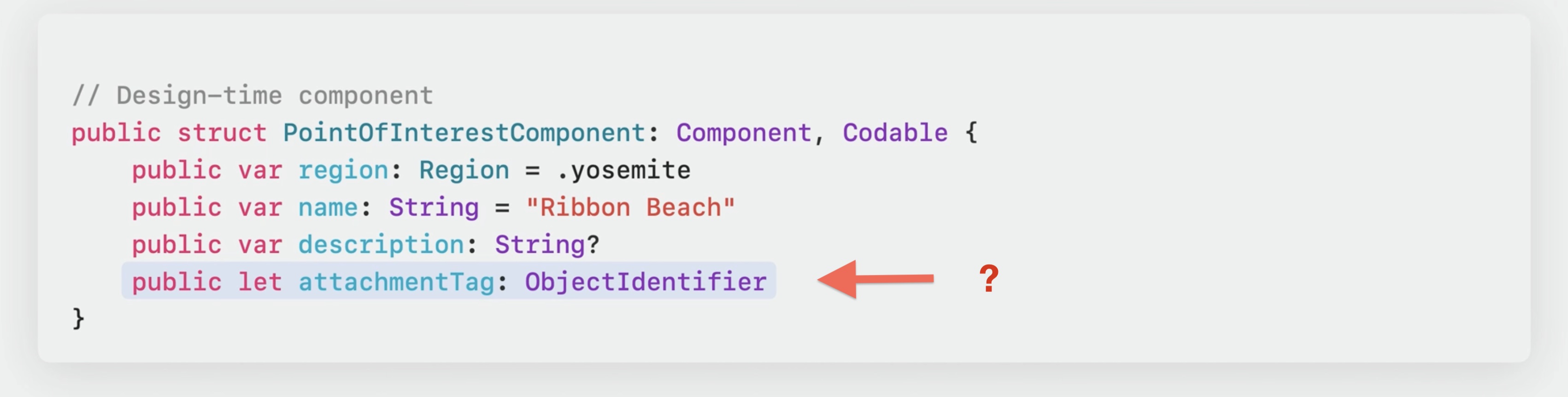

Why didn't we just add a tag property to our PointOfInterestComponent instead?

Attachment tags need to be unique, both for the ForEach struct and the attachments mechanism to work. And since all the properties in our custom component will be shown in Reality Composer Pro's Inspector Panel when we add the component to an entity, that means the attachmentTag would show up there too.

Entities conform to the Identifiable protocol, so they have identifiers that are unique automatically. We can get this identifier at runtime from the entity.

To have the attachmentTag property not show up in Reality Composer Pro, we use a technique called "design-time versus runtime components."

We'll separate our data into two different components, one for design-time data that we want to arrange in Reality Composer Pro, and one for runtime data that we will attach to those same entities dynamically at runtime.

This is for properties that we don't want to show in our Inspector Panel in Reality Composer Pro. So we'll define a new component, PointOfInterestRuntimeComponent, and move our attachment tag inside it.

```swift
// Design-time component
public struct PointOfInterestComponent: Component, Codable {
    public var region: Region = .yosemite
    public var name: String = "Ribbon Beach"
    public var description: String?
}

// Run-time component
public struct PointOfInterestRuntimeComponent: Component {
    public let attachmentTag: ObjectIdentifier
}
```

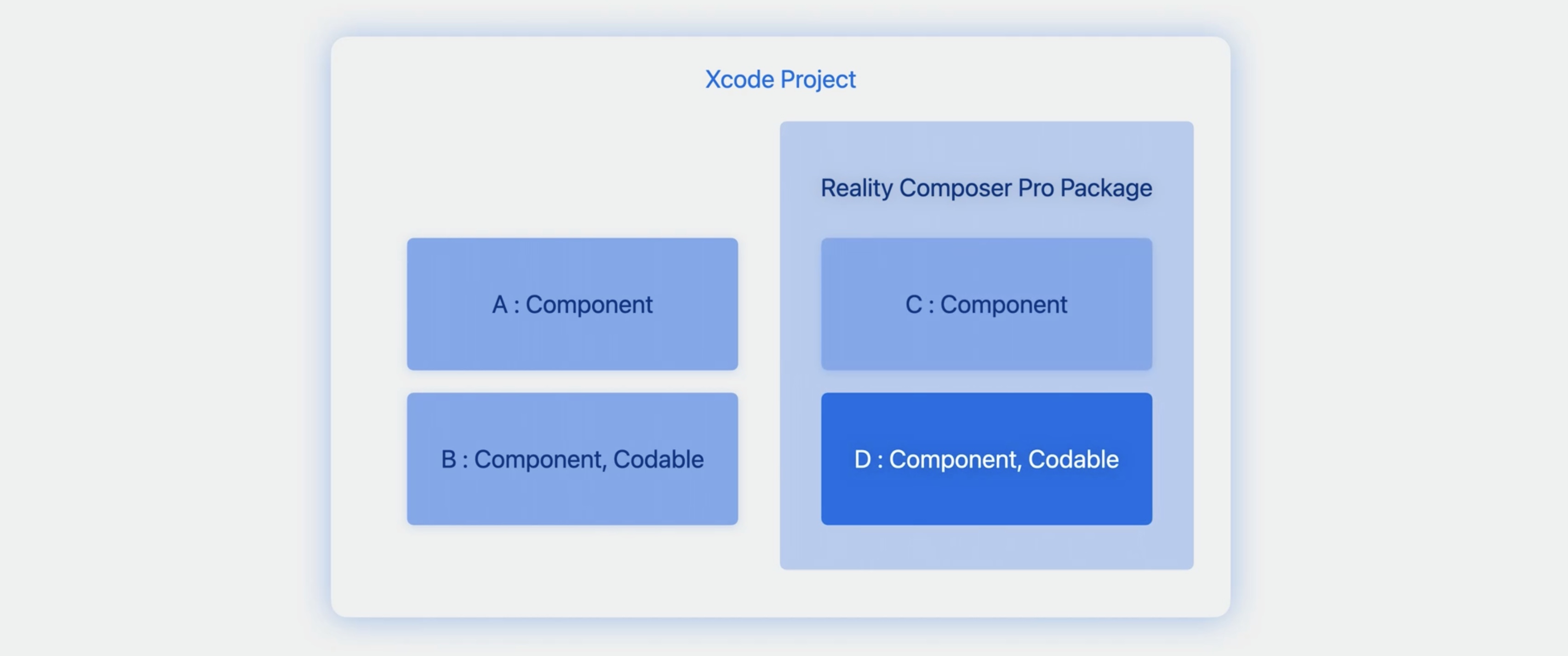

Custom components in Reality Composer Pro

Reality Composer Pro automatically builds the component UI for you based on what it reads in your Swift package.

It inspects the Swift code in your package and makes any codable components it finds available for you to use in your scenes. Here we're showing four components. Components A and B are in our Xcode project, but they are not inside the Reality Composer Pro package, so they won't be available for you to attach to your entities in Reality Composer Pro.

Component C is inside the package but it is not codable, so Reality Composer Pro will ignore it. Of the four components shown here, only Component D will be shown in the list in Reality Composer Pro because it is within the Swift package and it is a codable component.

That one is our design-time component, while all the others may be used as runtime components.

Design-time components are for housing simpler data, such as ints, strings, and SIMD values, things that 3D artists and designers will make use of.

We will see an error in your Xcode project if you add a property to your custom component that's of a type that Reality Composer Pro won't serialize.

We'll first add our PointOfInterest runtime component to our entity and then use the runtime component to help us match up our attachment entities with their corresponding points of interest on the diorama.

Here's where our runtime component comes in. We're reading in our PointOfInterest entities and creating our attachment views. We queried for all our design-time components, and now we'll make a new corresponding runtime component for each of them. We store our attachmentTag in our runtime component, and we store our runtime component on that same entity. In this way, the design-time component is like a signifier. It tells our app that it wants an attachment made for it.

The runtime component handles any other kinds of data we need during app execution, but don't want to store in the design-time component.

```swift
static let markersQuery = EntityQuery(where: .has(PointOfInterestComponent.self))
@State var attachmentsProvider = AttachmentsProvider()

rootEntity.scene?.performQuery(Self.markersQuery).forEach { entity in
    guard let pointOfInterest = entity.components[PointOfInterestComponent.self] else { return }

    let attachmentTag: ObjectIdentifier = entity.id

    let view = LearnMoreView(name: pointOfInterest.name, description: pointOfInterest.description)
        .tag(attachmentTag)

    attachmentsProvider.attachments[attachmentTag] = AnyView(view)

    // Store the tag on the same entity so we can match them up later.
    let runtimeComponent = PointOfInterestRuntimeComponent(attachmentTag: attachmentTag)
    entity.components.set(runtimeComponent)
}
```

In our RealityView, we have one more step before we see our attachment entities show up in our scene. Once we've provided our SwiftUI Views in the attachments ViewBuilder, SwiftUI will call our RealityView's update closure and give us our attachments as RealityKit entities.

But if we just add them to the content without positioning them, they'll all show up sitting at the origin of the scene, position 0, 0, 0.

That's not where we want them. We want them to float above each point of interest on the terrain. We need to match up our attachment entities with our invisible point of interest entities that we set up in Reality Composer Pro.

The runtime component we put on the invisible entity has our tag in it. That's how we'll match up which attachmentEntity goes with each point of interest entity. We query for all our PointOfInterestRuntimeComponents, we get that runtime component from each entity returned by the query, then we use the component's attachmentTag property to get our attachmentEntity from the attachments parameter to the update closure.

Now we add our attachmentEntity to the content and position it half a meter above the point of interest entity.

```swift
static let runtimeQuery = EntityQuery(where: .has(PointOfInterestRuntimeComponent.self))

RealityView { _, _ in
} update: { content, attachments in
    rootEntity.scene?.performQuery(Self.runtimeQuery).forEach { entity in
        guard let component = entity.components[PointOfInterestRuntimeComponent.self],
              let attachmentEntity = attachments.entity(for: component.attachmentTag) else {
            return
        }

        content.add(attachmentEntity)
        // Float the attachment half a meter above its point of interest.
        attachmentEntity.setPosition([0, 0.5, 0], relativeTo: entity)
    }
} attachments: {
    ForEach(attachmentsProvider.sortedTagViewPairs, id: \.tag) { pair in
        pair.view
    }
}
```

Let's run our app again...

Play audio

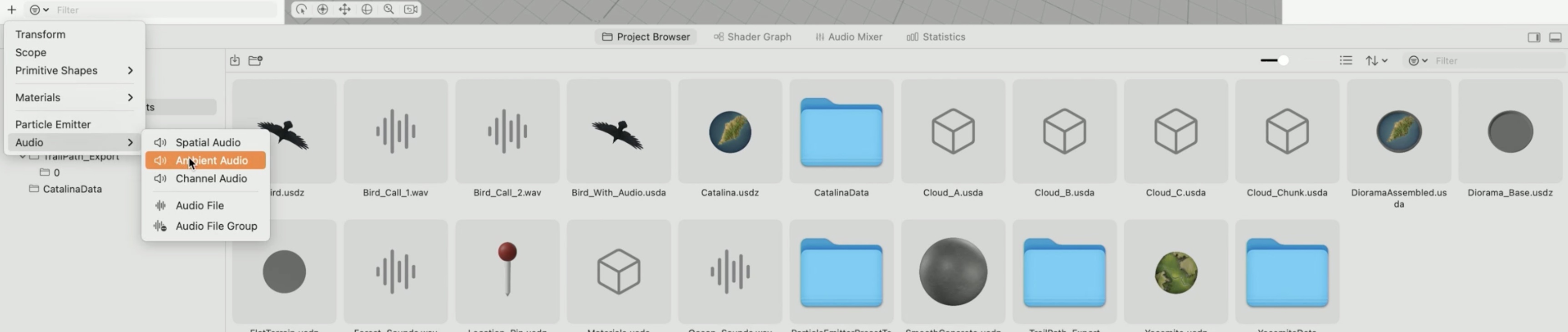

To set up something that plays audio in Reality Composer Pro, we can bring in an audio entity by clicking the plus button, selecting Audio, and then selecting Ambient Audio.

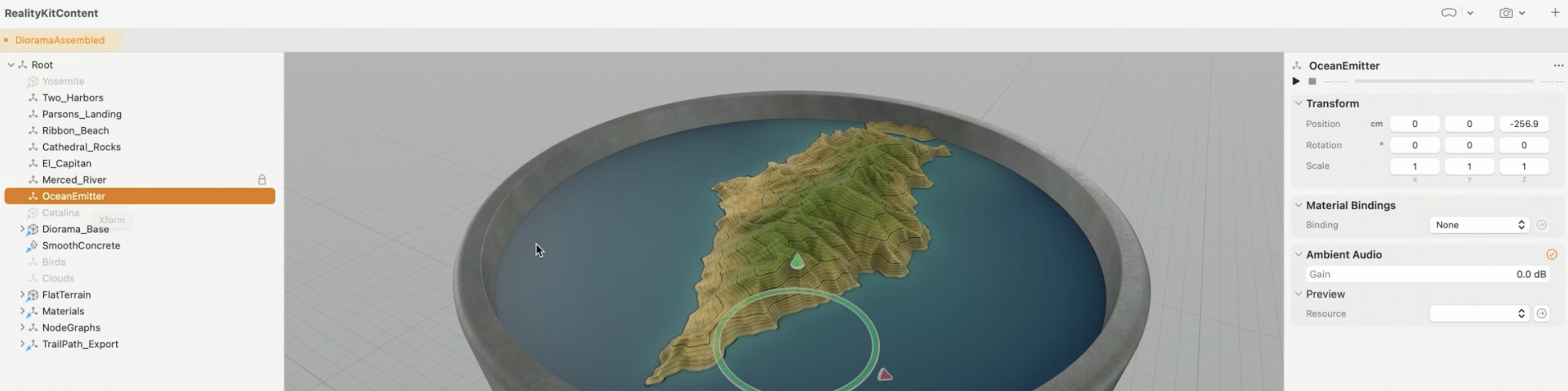

This creates a regular invisible entity with an AmbientAudioComponent on it. Let's name it OceanEmitter. It will be used to play ocean sounds for Catalina Island.

You need to add an audio file.

We can preview the audio component by selecting a sound in the Preview menu of the component in the Inspector Panel, but this won't automatically play the selected sound when the entity is loaded in the app.

For that, we need to load the audio resource and tell it to play. To play this sound, we'll get a reference to the entity that we put the audio component on. We've named our entity OceanEmitter, so we'll find our entity by that name. We load the sound file using the AudioFileResource initializer, passing it the full path to the audio file resource prim in our scene. We give it the name of the .usda file that contains it in our Reality Composer Pro project. In our case, that's our main scene named DioramaAssembled.usda.

We create an audioPlaybackController by calling entity.prepareAudio so we can play, pause, and stop this sound.

```swift
func playOceanSound() {
    guard let entity = entity.findEntity(named: "OceanEmitter"),
          let resource = try? AudioFileResource(named: "/Root/Resources/Ocean_Sounds_wav",
                                                from: "DioramaAssembled.usda",
                                                in: RealityContent.realityContentBundle) else { return }

    // The playback controller lets us play, pause, and stop the sound.
    let audioPlaybackController = entity.prepareAudio(resource)
    audioPlaybackController.play()
}
```

Now, we're going to crossfade between two audio sources. We add a forest audio emitter the same way we added our ocean emitter entity.

Material properties

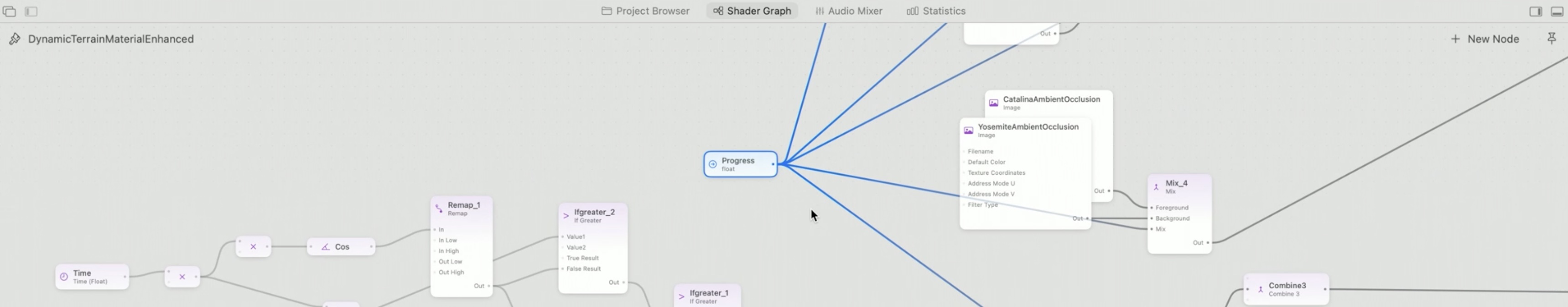

Next, let's see how we morph our terrain using the slider and include audio in this transition. We'll use a property from our Shader Graph material to morph between the two terrains.

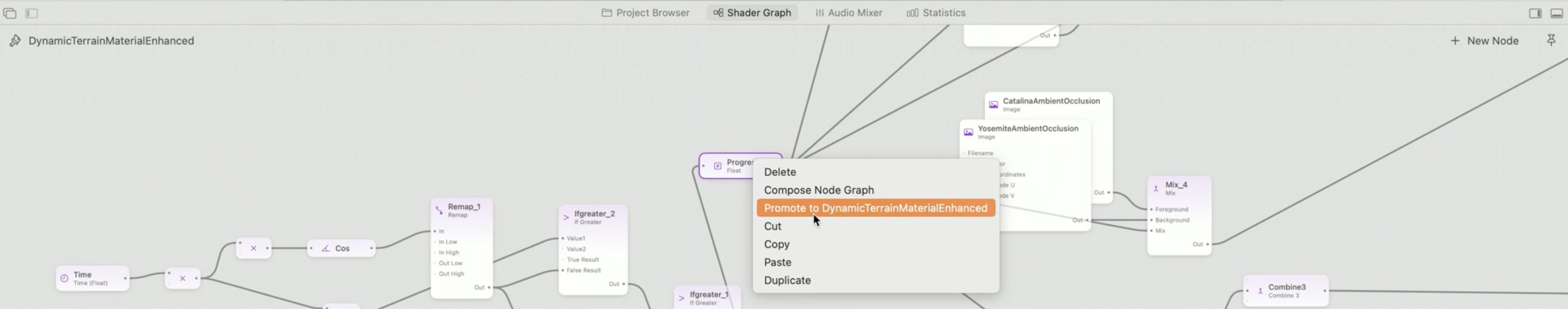

In the other session, Apple engineer Niels created a geometry modifier using Reality Composer Pro's Shader Graph. We can hook it up to our scene and drive some of the parameters at runtime. We want to connect this Shader Graph material with a slider. To do that, we need to promote an input node. Command-click on the node and select Promote.

This tells the project that we intend to supply data at runtime to this part of the material. We'll name this promoted node Progress, so we can address it by that name at runtime.

We can now dynamically change this value in code. We get a reference to the entity that our material is on. Then we get its ModelComponent, which is the RealityKit component that houses materials. From the ModelComponent, we get its first material.

There's only one on this particular entity. We cast it to type ShaderGraphMaterial. Now, we can set a new value for our parameter with name Progress. Finally, we store the material back onto the ModelComponent, and the ModelComponent back onto the terrain entity.

```swift
guard let terrain = rootEntity.findEntity(named: "DioramaTerrain"),
      var modelComponent = terrain.components[ModelComponent.self],
      var shaderGraphMaterial = modelComponent.materials.first
        as? ShaderGraphMaterial else { return }

do {
    try shaderGraphMaterial.setParameter(name: "Progress", value: .float(sliderValue))
    // Store the material back on the component, and the component back on the entity.
    modelComponent.materials = [shaderGraphMaterial]
    terrain.components.set(modelComponent)
} catch { }
```

Now we'll hook that up to our SwiftUI slider. Whenever the slider's value changes, we'll grab that value, which is in the range 0 to 1, and feed it into our ShaderGraphMaterial.

```swift
@State private var sliderValue: Float = 0.0

Slider(value: $sliderValue, in: (0.0)...(1.0))
    .onChange(of: sliderValue) { _, _ in
        guard let terrain = rootEntity.findEntity(named: "DioramaTerrain"),
              var modelComponent = terrain.components[ModelComponent.self],
              var shaderGraphMaterial = modelComponent.materials.first
                as? ShaderGraphMaterial else { return }

        do {
            try shaderGraphMaterial.setParameter(name: "Progress", value: .float(sliderValue))
            modelComponent.materials = [shaderGraphMaterial]
            terrain.components.set(modelComponent)
        } catch { }
    }
```

Next, we crossfade between the ambient audio tracks for the two terrains.

Because we've also put an AmbientAudioComponent on our two audio entities, ocean and forest, we can adjust how loud the sound plays using the gain property on them.

We'll query for all entities -- all two at this point, our ocean and our forest -- that have the AmbientAudioComponent on them. Plus, we added another custom component called RegionSpecificComponent so we can mark the entities that go with one region or the other. We get a mutable copy of our audioComponent because we're going to change it and store it back onto our entity.

We call a function that calculates what the gain should be given a region and a sliderValue. We set the gain value onto the AmbientAudioComponent, and then we store the component back onto the entity.

```swift
@State private var sliderValue: Float = 0.0
static let audioQuery = EntityQuery(where: .has(RegionSpecificComponent.self)
                                    && .has(AmbientAudioComponent.self))

Slider(value: $sliderValue, in: (0.0)...(1.0))
    .onChange(of: sliderValue) { _, _ in
        // ... Change the terrain material property ...

        rootEntity?.scene?.performQuery(Self.audioQuery).forEach({ audioEmitter in
            guard var audioComponent = audioEmitter.components[AmbientAudioComponent.self],
                  let regionComponent = audioEmitter.components[RegionSpecificComponent.self]
            else { return }

            let gain = regionComponent.region.gain(forSliderValue: sliderValue)
            audioComponent.gain = gain
            audioEmitter.components.set(audioComponent)
        })
    }
```
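The gain(forSliderValue:) function is called above, but its body is not shown in these notes. The following is one hypothetical way such a function could work, assuming that the slider runs from Yosemite at 0 to Catalina at 1, that gain is expressed in decibels, and that -60 dB is quiet enough to count as silence. The floor value and the linear-to-decibel mapping are choices made for this sketch, not the session's implementation.

```swift
import Foundation

enum Region: String, Codable {
    case catalina
    case yosemite

    /// Hypothetical crossfade: returns a gain in decibels for this region.
    /// Assumes slider 0 means fully Yosemite and slider 1 means fully Catalina.
    func gain(forSliderValue sliderValue: Float) -> Double {
        let silence = -60.0                               // assumed floor, in dB
        let t = Double(min(max(sliderValue, 0), 1))       // clamp to 0...1
        let level = (self == .yosemite) ? 1 - t : t       // linear level, 0...1
        guard level > 0 else { return silence }
        return max(20 * log10(level), silence)            // convert level to dB
    }
}

let forestAtStart = Region.yosemite.gain(forSliderValue: 0)
let oceanAtStart = Region.catalina.gain(forSliderValue: 0)
let oceanAtEnd = Region.catalina.gain(forSliderValue: 1)
```

With this mapping both regions sit at the same level at the midpoint of the slider, and each one reaches the silence floor at the far end of its range.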

In action:

Moving the slider, we can see our Shader Graph material changing the geometry of the terrain map, and we can hear the forest sound fading out and the ocean sound coming in.

Wrap Up

- Use Reality Composer Pro to prepare RealityKit content

- Place attachment placeholders in Reality Composer Pro

- Load and play audio

- Drive material properties

Resources

Have a question? Ask with tag wwdc2023-10273

Search the forums for tag wwdc2023-10273

Check out also

Build spatial experiences with RealityKit - WWDC23

Develop your first immersive app - WWDC23

Enhance your spatial computing app with RealityKit - WWDC23

Explore materials in Reality Composer Pro - WWDC23

Meet Reality Composer Pro - WWDC23

Dive into RealityKit 2 - WWDC21
